I’m a child of divorce, so I’m pretty good at reading between the lines. Divorced parents always say things about each other to you without actually saying them.

Learning to interpret my parents as a child comes in handy as an adult at tech conferences, where the good stuff, the really juicy stuff, is all in the subtext.

After spending two days at Microsoft’s developer conference, Build, watching CEO Satya Nadella, CTO Kevin Scott, and surprise guest Sam Altman speak, the most important thing that wasn’t said but was strongly hinted at was this:

GPT-5 is coming, and it’s going to be amazing.

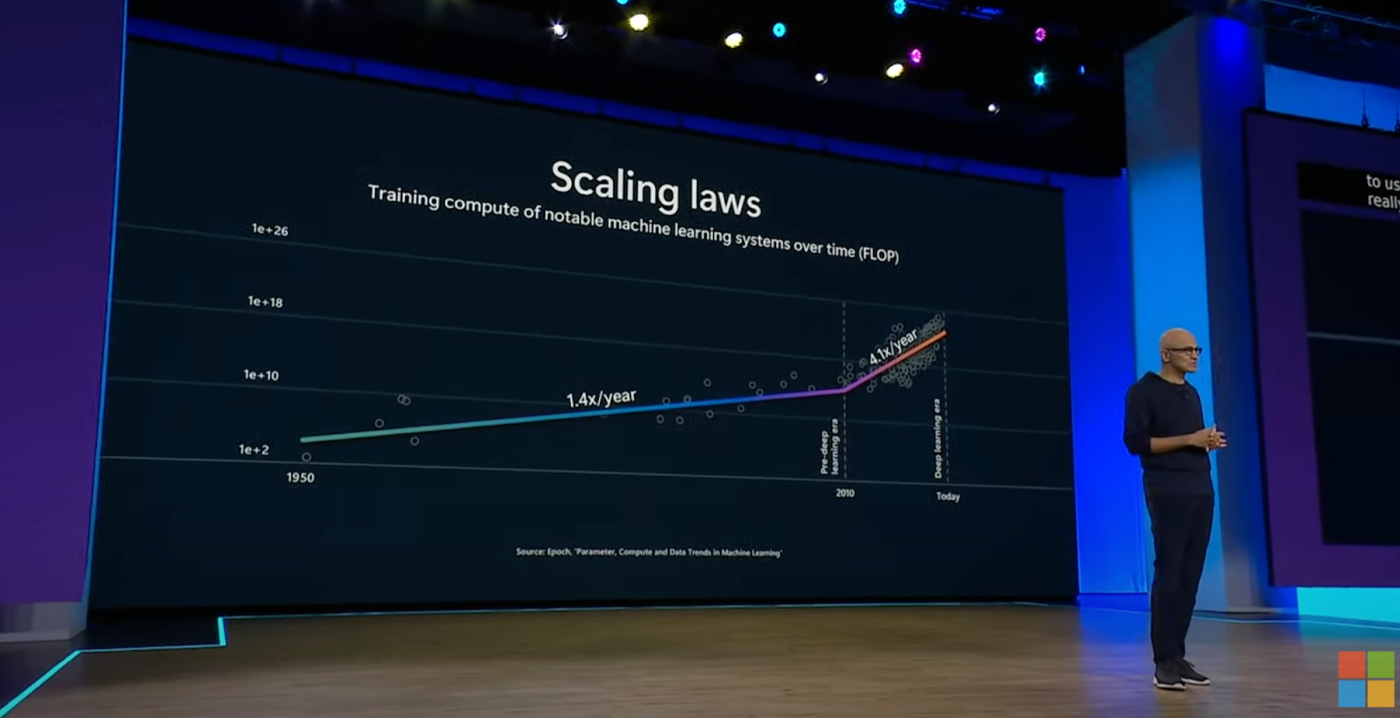

How did I hear them say this without saying it? In every major presentation at Build, executives talked extensively about scaling laws.

Scaling laws created the AI wave. About five years ago, OpenAI realized that if you increase the amount of compute and data that you run through a model, you can predictably increase its level of intelligence. The company has harnessed this core insight to take it from the first GPT model all the way through to its GPT-4o announcement last week.

The big question for OpenAI—and everyone else—is how long these laws will hold. Will we hit an asymptote or a point of diminishing returns? In order to create the next model, will we need a galactic Death Star-level of compute that humanity can’t yet assemble? Will we run out of usable data? If so, when?

This question is even more critical because competitors are starting to catch up. Google and Anthropic have both released GPT-4 class models in the last few months, fueling suspicion that scaling laws are starting to taper off. Maybe, theories say, OpenAI hasn’t released a new frontier model because it can’t.

Enter Microsoft. Here’s Satya Nadella in a presentation the day before the Build conference, where the company announced its new AI-enabled Windows laptops, Copilot+ PCs:

Source: YouTube.

Here’s another one the next day at his Build keynote:

Source: YouTube.

That same slide flashed 2-3 more times during the Build keynotes of other presenters. And even if your parents are happily ensconced in a marriage more secure than Fort Knox, you should be able to know what they mean:

The scaling laws are holding up.

It’s clear that Microsoft has been working on some massive new models and is pretty confident about the results. That’s the only reason they’d be willing to put the words “Scaling laws” in letters the size of Satya Nadella’s torso on a slide.

After the keynotes, I was lucky enough to join a small conversation with possibly the most likable man in AI, CTO Kevin Scott. He deepened my impression even further.

Scott talked about how expensive it is for Microsoft to build and run the supercomputers that train and operate these language models. “If we were at the point of diminishing marginal returns, we would’ve been fired because of the capex,” he said.

At another point, he cautioned against the idea that AI progress is linear. While it might seem that way, it is only because the “big updates [e.g., new models] tend to happen every two years.” So it might look like progress has been relatively minor recently, but that’s because it takes a really long time to build a big new supercomputer to train the next big model, and then it takes another chunk of time to get the model ready to be used in a production environment. While we’re speculating about the demise of scaling laws, that’s all happening behind the scenes.

GPT-4 was released in March 2023. If Kevin Scott’s two-year rule holds, GPT-5 should be released by March of next year—nine months from now.

Mark your calendars.
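For anyone who wants to check the math, here is a minimal sketch of that arithmetic in Python. The two-year cadence is Scott's remark taken literally. The exact GPT-4 release day (March 14, 2023) and the "today" date (assumed to be just after Build, in late May 2024) are not stated above, so treat them as assumptions. The function names are purely illustrative.

```python
from datetime import date

GPT4_RELEASE = date(2023, 3, 14)   # GPT-4's public release day (the article only says "March 2023")
CADENCE_YEARS = 2                  # Scott's "big updates every two years", taken literally
ASSUMED_TODAY = date(2024, 5, 24)  # assumption: roughly the end of Build week


def projected_release(previous: date, cadence_years: int) -> date:
    """Shift the previous release forward by a whole number of years."""
    # Safe here because March 14 exists in every year (no Feb 29 edge case).
    return previous.replace(year=previous.year + cadence_years)


def whole_months_between(start: date, end: date) -> int:
    """Count complete calendar months from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:
        months -= 1  # the final month is not yet complete
    return months


target = projected_release(GPT4_RELEASE, CADENCE_YEARS)
months_left = whole_months_between(ASSUMED_TODAY, target)

print(f"Projected date under the two-year rule: {target.isoformat()}")
print(f"Whole months remaining: {months_left}")
```

Under these assumptions the projection lands in mid-March 2025, nine whole months out. This is only the rule of thumb applied mechanically, not a forecast of an actual release date.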

Three items of note

AI all the things

Microsoft is building AI into every layer of its stack. The company is building everything from supercomputers to train frontier models, to new AI-first features in Windows, to developer tools that allow anyone to make AI applications. It’s a full-court press on AI.

Startups at risk: None—this is probably good for them.

Total Recall

On Monday, Microsoft announced new Copilot+ PCs, laptops that are built to run a Windows-integrated AI copilot that can see your screen, talk to you over voice, and help you with day-to-day tasks.

One of the more notable aspects of this product launch was Recall, a feature that records everything that you do on your computer and allows you to easily surface it later. Recall runs locally, gives you control over what gets recorded, and is designed to not slow down your computer.

Startup at risk: Limitless, which offers basically the same functionality in an app.

Agents are the future of software

In our conversation, Scott noted that he thinks “agent” interactions—like the ones that Copilot enables—are the future of software. “One of the things that will probably happen is that you’re going to be using agents more than you’ll be using [apps or websites],” he said.

This view of the world is likely why Microsoft is making AI copilots such a core part of its strategy. At Build, they released a Copilot Studio product that allows anyone to build their own copilot products on their own data and with their own custom workflows. It’s a little bit like their version of Custom GPTs.

They also announced what they call Team Copilots, which can be added to Microsoft Teams—or any other Office-suite products—and record meetings, take action items, and help you manage your projects.

Startups at risk: AI meeting recorders, apps, and websites that don’t adapt.

Venue review: A room for quiet time!

There were many things to recommend the Seattle Convention Center. It had a giant room that could fit 5,000 people with a stage up front. It had well-appointed media breakout rooms with couches and comfy chairs equipped with cute side tables for your laptop or your snack. Its fixtures and furniture were bright, clean, and new. Its staff was friendly.

But by far the best part of the venue: They had a room for quiet time. They sectioned off a large room with reclining chairs and dim light where talking was kept to a minimum.

It was the perfect place for a writer on a deadline to hide from his computer (definitely not speaking from personal experience).

These kinds of events are hard to pull off, and the staff did a terrific job being thoughtful about all of the details.

My final rating: 8/10

Food review: Starbucks, chicken tikka, apples

Now, to the most important part of this article: the food.

I’d rate the food at Build a cut above most large corporate conferences.

For lunch, I tried the chicken tikka masala bowl. The chicken was juicy and well cooked, and there was ample masala sauce, a lot of rice, and charred cauliflower. It wasn’t warm enough, though, so I have to reluctantly deduct some points.

Starbucks coffee and a bevy of tasty options like apples with peanut butter were on offer at snack time. It felt thoughtful and comfortable. My only complaint was there weren’t enough non-soda drink options.

My final rating: 7.5/10
