Look, here’s what we’re not going to do. We’re not going to freak out. We’re not going to prognosticate about utopia or predict doom. We’re going to keep our heads on straight and…

DID YOU FREAKING SEE SORA????

OpenAI’s new text-to-video model can generate a 60-second photorealistic video of a pair of adorable golden retrievers podcasting on a mountain top. It can generate a video of Bling Zoo, where a tiger lazes in an enclosure littered with emeralds and a capuchin monkey wears a king’s crown behind a gilded cage. It can generate a video of an AI Italian grandmother wearing a pink floral apron and making gnocchi in a rustic kitchen. (Her hands look a little like the hot dog fingers from Everything Everywhere All at Once, but still—that’s a movie, too!)

It’s wild. It’s incredible. It caused MrBeast to tweet at Sam Altman: “Please don’t make me homeless.”

There’s a line from a Chekhov story that reads, “I understood it as I understand lightning.” He might as well have been talking about Sora. The demos struck me bodily, like electricity.

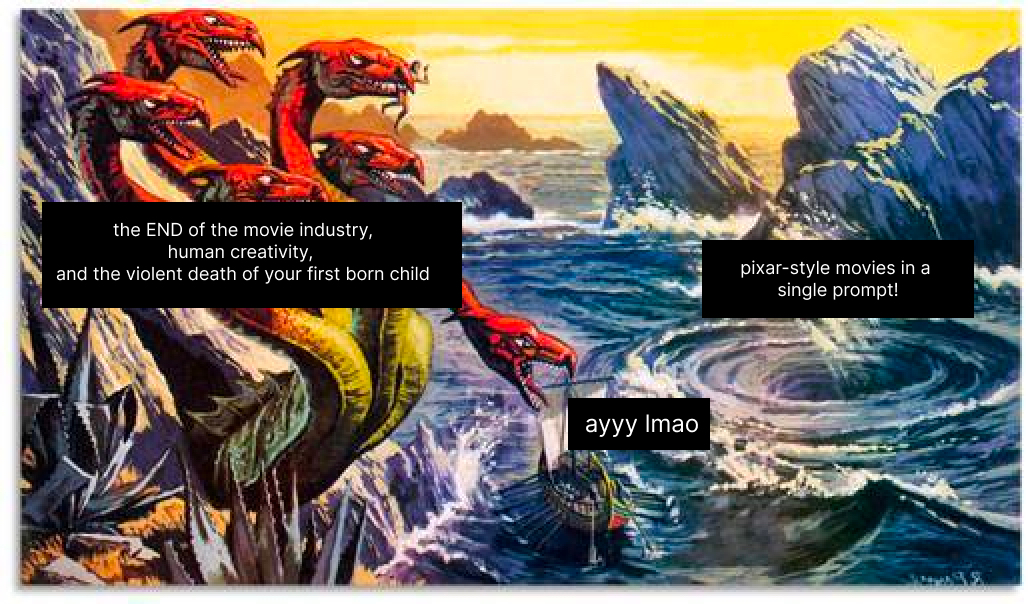

Phew. I’m glad I got that out of my system. It was important to say that because writing about a buzzy new drop from OpenAI is a little bit like sailing between a Scylla and Charybdis of the mind:

Meme format inspired by Visakan Veerasamy.

In one part of my brain are the multi-headed dragons of doom telling me to strafe the freaking data centers before the movie industry combusts like Mel Gibson’s career. In another part is the techno-optimistic quantum whirlpool of excitement already planning the Pixar-style movie I will create as soon as I get my hands on this model. The world will finally see me as the undiscovered heir to George Lucas that I secretly always knew that I was, lack of ever having made a film be damned.

The problem is, I know both parts of my brain are wrong. Ayyy lmao.

My brain is mistaking newness for something that it’s not. The feeling I’m getting from watching these demos is not the one I get from watching a great movie, YouTube video, or TikTok. Why? I know, in time, the newness of these demos will fade, and they’ll become normal—mundane, even. I’ll no longer get excited by them. But a well-crafted movie will continue to be compelling.

The best way to keep a level head about advances like these is to think of them in terms of long-term trends. Sora in particular, and AI filmmaking in general, is an extension of two important ones:

- Tremendous amounts of data and compute being used to generate mind-blowing AI breakthroughs

- Technology driving down the cost of filmmaking

Let’s talk about both of them.

How Sora uses massive amounts of data to make mind-blowing video clips

AI runs on scale: More data and more compute mean better results. Sora is impressive because OpenAI figured out how to throw more data and more compute at text-to-video than anyone has before. Here’s a simplified version of how the company did it, based on what I can glean from its whitepaper.

Imagine a film print of The Dark Knight. You know what I’m talking about: the reel of cellophane wound around a metal disk that a young man in a red blazer hooks up to a projector in an old-style movie theater.

You unroll the film from its reel and chop off the first 100 frames of cellophane. You take each frame (here the Joker laughing maniacally, there the Batman grimacing) and perform the following strange ritual:

You use an X-acto knife to cut a gash in the shape of an amoeba in the first frame. You excise this cellophane amoeba with tweezers as carefully as a watchmaker and put it in a safe place. Then you move on to the next frame: You cut the same amoeba-shaped hole from the same part of the next cellophane frame. You remove this new amoeba—shaped precisely the same as the last one—with tweezers and stack it carefully on top of the first one. You keep going, until you’ve done this to all 100 frames.

You now have a multicolored amoeba extruded along its Y-axis. A tower of cellophane that could be run through a projector to show a small area of The Dark Knight, as if someone stuck their hand in a loose fist in front of the projector, letting only a little bit of the movie through.

This tower is then compressed and turned into what’s called a “patch”: a smear of color changing through time. The patch is the basic unit of Sora in the same way the “token” is the basic unit of GPT-4. Tokens are bits of words, while patches are bits of movies.
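If it helps to see the ritual without the X-acto knife, here is a rough sketch in Python with NumPy. To be clear about what is assumed: this is not OpenAI's code, and the function name `extract_spacetime_patches` and the patch sizes are made up for illustration. It cuts plain rectangles instead of amoebas, works on raw pixels, and skips the compression step entirely. All it shows is the core idea of following the same small region of the frame through a run of consecutive frames and flattening that tower into one unit.

```python
import numpy as np

def extract_spacetime_patches(video, t=4, h=16, w=16):
    """Split a video array (frames, height, width, channels) into flat patches.

    Hypothetical helper for illustration only: rectangular blocks, no compression.
    """
    T, H, W, C = video.shape
    if T % t or H % h or W % w:
        raise ValueError("video dimensions must be divisible by the patch size")
    # Give each patch's extent in time, height, and width its own axis.
    blocks = video.reshape(T // t, t, H // h, h, W // w, w, C)
    # Put the three "which patch" axes first, then the "inside the patch" axes.
    blocks = blocks.transpose(0, 2, 4, 1, 3, 5, 6)
    # One row per patch: a small region of the frame followed through time.
    return blocks.reshape(-1, t * h * w * C)

# A stand-in "film": 100 frames of 64x64 RGB noise.
rng = np.random.default_rng(0)
video = rng.integers(0, 256, size=(100, 64, 64, 3), dtype=np.uint8)

patches = extract_spacetime_patches(video, t=25, h=16, w=16)
print(patches.shape)  # (64, 19200)
```

Each of the 64 rows is one tower: a 16x16 square of the picture tracked across 25 frames. A sequence of rows like this plays roughly the role for video that a sequence of tokens plays for text.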


Comments


A well-thought-out post, Dan! One area where I disagree with you is the assertion that Sora will not replace Hollywood movies. It looks like you assume Sora won't improve much beyond its current form, thus making it suitable for simply testing out movie concepts, which will then be picked up by interested studios. However, I think that a version or two down the line (less than 2 years away), Sora 2.0 or similar will be used by millions to create Hollywood-quality movies that are visually compelling.

It is true that YouTube vloggers did not replace movies. I think that is because smartphone cameras and the YouTube platform do not replace good acting, script, dialogue, cinematography, music and everything else that goes into a good Hollywood production. I would argue that what YouTube vloggers did replace is reality TV, because to make a good reality TV series, all you need is a good camera and some family drama.

I think Sora 2.0, along with the future versions of Eleven Labs, Suno AI (or their competitors) and other AI companies will replace Hollywood in the very near future. I won't be surprised to see a Hollywood-quality blockbuster production coming out in under 2 years that has a budget of under USD 2,000 and is made entirely by fewer than 5 people. An alternate version of this would be personalised, AI-generated, Hollywood-quality movies created on the fly, just for you, based on your tastes and mood. All you need to do is input a prompt.