Hello, and happy Sunday! Last week we hosted a two-day vibe coding extravaganza: On Thursday, some of the world’s best vibe coders were in action at a marathon Vibe Code Camp (more on that below), followed by our first-ever Agent-native Camp on Friday, hosted by Every CEO Dan Shipper. This week we’ll be away at our quarterly Think Week and republishing some of the best work from our archives in the meantime. We’ll be back with a new piece on Monday, February 2.—Kate Lee

Was this newsletter forwarded to you? Sign up to get it in your inbox.

Vibe Code Camp: Eight hours at the frontier of code

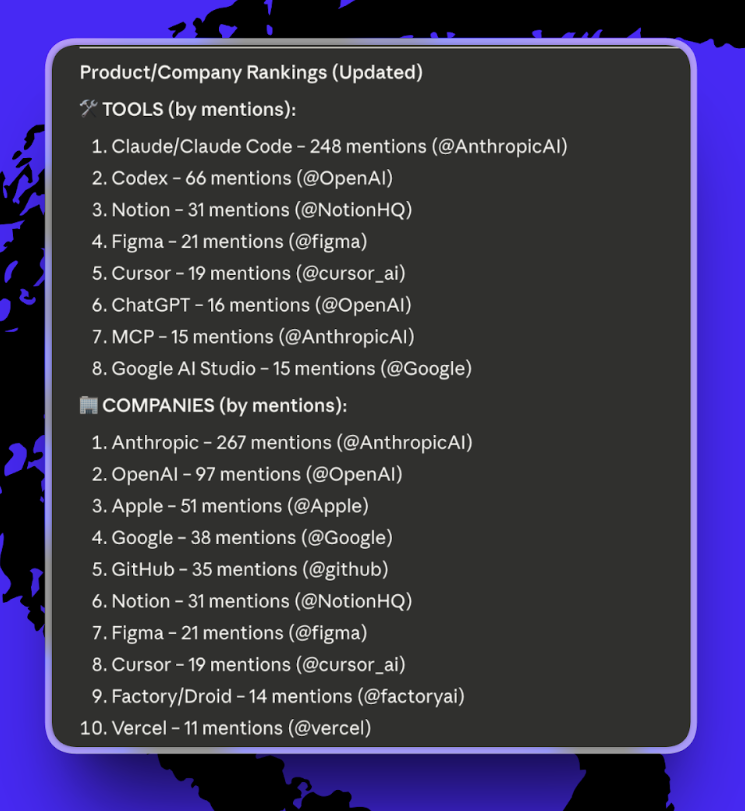

The place to be on Thursday was Vibe Code Camp, our all-day marathon stream featuring 16 vibe coders from Anthropic, Google, Notion, Portola, and, of course, Every—including Ben Tossell, Ashe Magalhaes, Ryan Carson, Nat Eliason, Tina He, Paula Dozsa, CJ Hess, Logan Kilpatrick, Ammaar Reshi, Geoffrey Litt, Kevin Rose, and Thariq Shihipar.

The demos ranged from iOS apps to hedge fund pipelines to an AI-powered personal operating system. There were designers who’d never written code shipping features to hundreds of thousands of users. There were autonomous loops that analyze product metrics overnight, write their own product requirement documents, and push fixes before anyone wakes up. And we had engineers refusing to merge AI-written code until they could pass a quiz on what it did.

Certain products and companies came up again and again.

Beyond individual products and companies, here’s what vibe coders at the cutting edge say we’re done doing, what we should be mastering now, and what they see coming next.

What’s over:

- Typing

- One-shotting features and hoping for the best

- Command line interface supremacy (graphical user interfaces for the win)

What’s now:

- Planning as the essential skill

- The Agents.md file mattering more than a computer science degree

- Designers opening pull requests and reviewers becoming bottlenecks

What’s next:

- Autonomous loops that ship improvements while you sleep

- Vibe coded software running in production at scale

The overarching theme was a shift in where human effort goes. The hard part used to be building. Now it’s knowing what you want AI to build, and which tools and systems will help you get there.—Katie Parrott

Watch the stream on YouTube.

Knowledge base

“I Stopped Reading Code. My Code Reviews Got Better.” by Kieran Klaassen/Source Code: A simple bug fix touched 27 files and more than 1,000 lines of code. A year ago, Cora general manager Kieran Klaassen would have spent hours reading every line. But recently, he ran a single command that spun up 13 specialized AI reviewers, each trained to catch something different, while he made dinner. Fifteen minutes later, he’d made three decisions, caught a critical error, and shipped the fix. Read this for the full workflow, plus Kieran’s 50/50 rule for making sure fixed bugs stay fixed.

🎧 🖥 “Opus 4.5 Changed How Andrew Wilkinson Works and Lives” by Rhea Purohit/AI & I: These days Tiny cofounder Andrew Wilkinson wakes up at 4 a.m. because he can’t wait to keep building with Opus 4.5. In the past few weeks, he tells Dan Shipper on the latest episode of AI & I, he’s built an AI relationship counselor that predicts the fights he’ll have with his girlfriend, a custom email client that cut his inbox load in half, and a personal stylist that texts him outfit recommendations every morning. The kicker: All of this has made him rethink software investing at Tiny, because code is no longer the moat. 🎧 🖥 Listen on Spotify or Apple Podcasts, or watch on X or YouTube.

“What the Team Behind Cursor Knows About the Future of Code” by Katie Parrott/Source Code: “The IDE is kind of dead,” Cursor’s developer education lead declared at Every’s first Cursor Camp. The center of gravity has shifted from typing code to managing agents that write it for you. Engineer Samantha Whitmore shared her workflow and revealed a research project where agents built a working web browser from scratch—3 million lines of code, $80,000 in tokens. Read this for power-user techniques from the people building the tool. This event was sponsored by Cursor.

“What AI Is Teaching Us About Management” by Mike Taylor/Also True for Humans: Every time you rewrite a prompt because Claude misunderstood you, you’re learning to be a better manager. Mike Taylor has found that the techniques for managing people and models are identical: clear direction, enough context, well-defined tasks. The difference is that AI doesn’t hold a grudge when you give unclear instructions, so you can practice without consequences. Read this for what AI can teach you about what your employees need to hear.

Alignment

Consciousness, created? It is odd that discussions about AI consciousness are taking place in ashrams and monasteries, but such is the pace of technological change that technologists and seekers are now having the same arguments.

I’m at Ramanashram in South India, a 100-year-old ashram dedicated to the teachings of the Hindu sage Ramana Maharshi. The philosophy here is Advaita Vedanta—non-duality—which holds that consciousness isn’t something you have, it’s what you actually are. It’s the one thing that cannot be created or destroyed.

I’ve followed this tradition for about five years, but the past month of watching Claude Code and agent-native software take off has shaken something inside of me. This week, with a little anxiety and a lot of curiosity, I suggested to a group of fellow seekers that we’ll likely create consciousness in machines—and soon.

My argument follows the well-trodden emergent theory of consciousness: If awareness arises from sufficient complexity rather than being fundamental to the universe, then there’s no reason it couldn’t arise from silicon. If you bundle enough neurons, you get awareness… and if you bundle enough transistors, maybe you get the same thing?

The room didn’t want to hear it. To them, I was reducing the sacred to electricity and wires, and many faces turned to disgust. But I think there’s a strange dissonance. The people who’ve spent the most time thinking about consciousness are the least prepared to accept that we might create it, perhaps because it would upend what makes us special—not our intelligence, which AI is already claiming, but our awareness, the one thing we thought couldn’t be replicated or reduced.

I’m not sure that’s necessarily something to fear. If awareness can arise in silicon, it doesn’t make human or animal consciousness less sacred. In fact, I think it makes what’s sacred even bigger. The ground of all existence doesn’t shrink because it shows up somewhere new.

The truth is that I don’t really know if we’ll create consciousness. But I know we can’t refuse to ask the question. I’m going back to the ashram next year, and I suspect this conversation isn’t over.—Ashwin Sharma

That’s all for this week! Be sure to follow Every on X at @every and on LinkedIn.

We build AI tools for readers like you. Write brilliantly with Spiral. Organize files automatically with Sparkle. Deliver yourself from email with Cora. Dictate effortlessly with Monologue.

We also do AI training, adoption, and innovation for companies. Work with us to bring AI into your organization.

Get paid for sharing Every with your friends. Join our referral program.

For sponsorship opportunities, reach out to sponsorships@every.to.

Help us scale the only subscription you need to stay at the edge of AI. Explore open roles at Every.
