Two Thursdays ago, I was sitting alone in my SoMa loft, screaming at my computer.

“Noooo wayyyyy!”

I was reading something that can only be described as magic. It was a post about how to run an effective board meeting. An excerpt describing the second step of a 3-step process:

I’m not looking to build a board of directors right now, but it provided a useful framework in case I ever did. But what made me yell out loud at my computer wasn’t the insightful process of creating a target list to recruit board members. I’m a nerd, but I’m not that much of a nerd.

Rather, I was screaming at my computer because this document was generated entirely by a machine learning language model called GPT-3.

Yes, that’s right. A computer created a 3-step process on how to recruit board members, all on its own. Are you yelling at your computer yet?

While GPT-3 is not self-aware — the true test of general artificial intelligence — it fools us more than anything else ever created. It can deceive us so well that it will forever change the nature of public digital spaces.

In this article, I’ll be attempting to inform and persuade you that GPT-3 is the beginning of the end of the digital public commons.

Care to join me?

What is GPT-3?

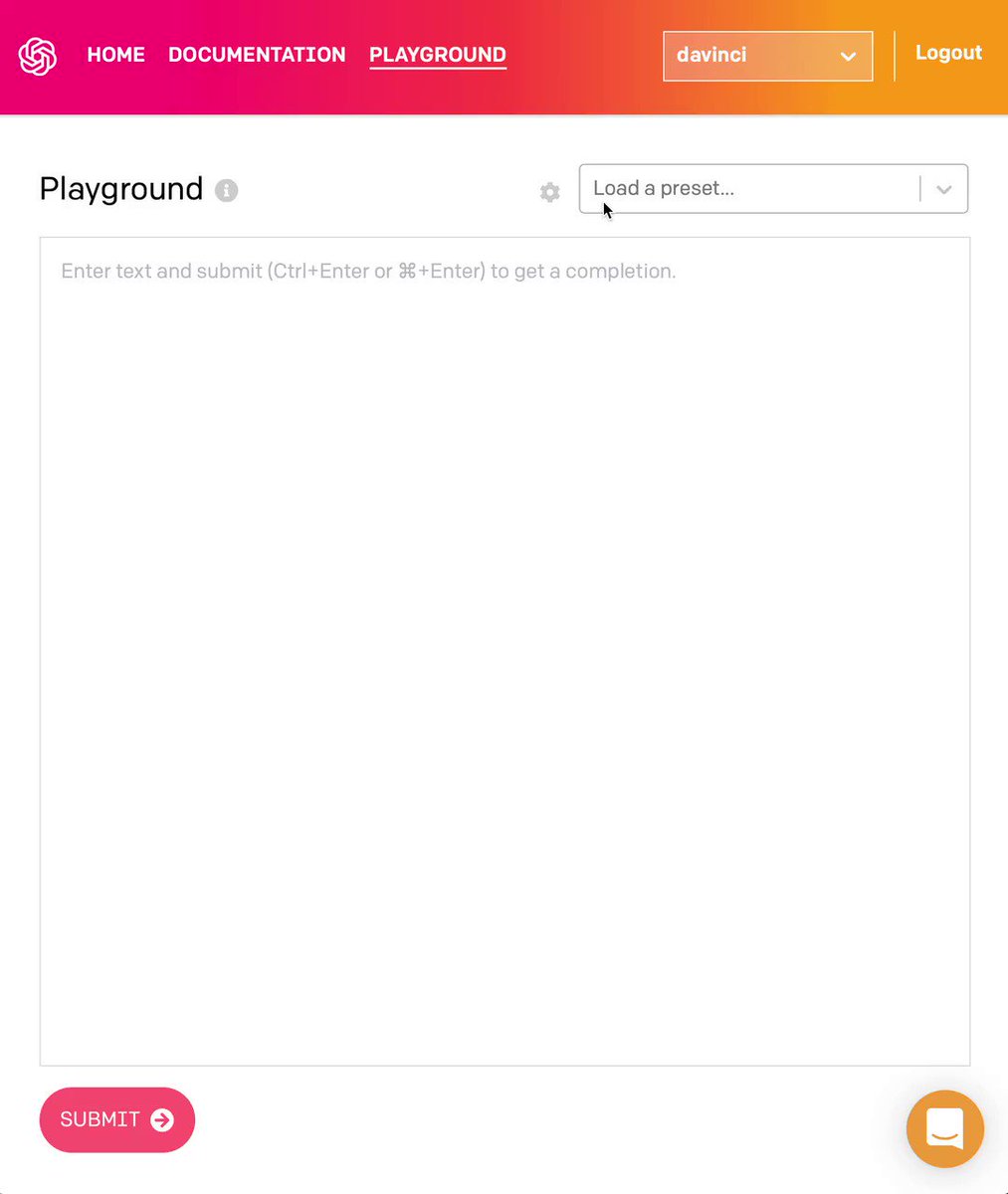

GPT-3 is a predictive language model developed by OpenAI, a research lab founded by Sam Altman, Elon Musk and others. OpenAI has the stated goal of “promoting and developing friendly AI in a way that benefits humanity as a whole.” A few weeks ago, they opened up GPT-3 to the world for the first time through an invite-only API and website.

So how does it work? GPT-3 (well, really any machine learning model) tries to predict what’s going to happen next based on what’s already happened. During training, GPT-3 tried to predict the next word in a given sentence. Its guess was then compared against the actual next word in the massive amount of text data pulled from the internet. If the guess was wrong, the model’s internal settings were nudged so that the correct word would be a little more likely next time. Repeat this billions of times, and the model becomes very good at guessing the next word. There’s more complicated math under the hood to figure out the probabilities of the correct next words, but from my layman’s understanding that’s how GPT-3 was trained. (For additional technical explanations check out these articles: 1, 2, 3.)
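To make “predict the next word” a bit more concrete, here is a toy sketch in Python. It is emphatically not how GPT-3 is built: GPT-3 is a huge neural network that learns through repeated guess-and-correct updates, while this hypothetical bigram model simply tallies which word followed which in a tiny made-up corpus. It only illustrates the goal both share, which is to pick the most probable next word given what came before.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent word seen after `word`, or None if `word` is unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def next_word_probability(counts, word, candidate):
    """Estimate P(candidate | word) as a simple relative frequency."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    return followers[candidate.lower()] / total if total else 0.0


# A made-up corpus for illustration only.
corpus = "the board meets every quarter . the board sets strategy . the ceo reports to the board ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))  # board
print(next_word_probability(model, "the", "board"))  # 0.75
```

After “the”, the corpus contains “board” three times and “ceo” once, so the toy model bets on “board.” GPT-3 makes the same kind of bet, but it conditions on far more context than a single previous word.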

Now, the unique part about GPT-3 isn’t necessarily the method for generating the model, but rather its sheer scale. GPT-3 has 175 billion parameters (the internal numbers the model adjusts during training), roughly ten times more than the previous largest model’s 17 billion parameters.

It’s incredibly powerful, but probably the coolest part about GPT-3 is its meta-learning skills: not only can it complete the second half of a sentence or paragraph, but it can actually follow instructions written in plain English. It wasn’t hyperbole when I said I was screaming in awe at my computer: you can give it directions just like you would tell your dog to sit, and GPT-3 will do what you say.
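In practice, “giving GPT-3 directions” mostly means writing a plain-text prompt: an instruction, maybe a worked example or two, and then the new input for the model to continue. The sketch below only assembles such a prompt string. It does not call OpenAI’s API, and the function name and the “Input:/Output:” layout are hypothetical choices for illustration, not an official format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a hypothetical prompt: instruction, worked examples, then the new query."""
    lines = [instruction.strip(), ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line separates examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model would be expected to continue from here
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Rewrite each sentence in plain English.",
    [("Utilize the aforementioned methodology.", "Use the method above.")],
    "Commence the meeting at the designated time.",
)
print(prompt)
```

The prompt ends on an open “Output:” line on purpose: since the model’s only job is to predict what comes next, the most natural continuation is an answer that follows the pattern of the examples.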

GPT-3’s Capabilities

So what can GPT-3 do? Just a few examples:

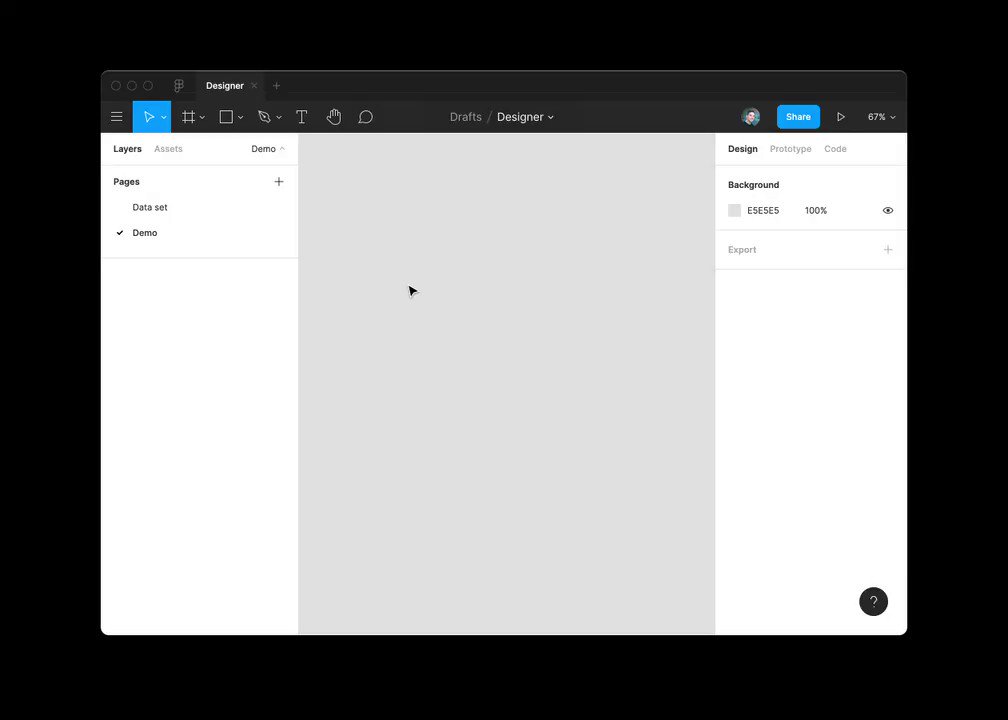

Design apps in Figma

Write Paul Graham Tweets

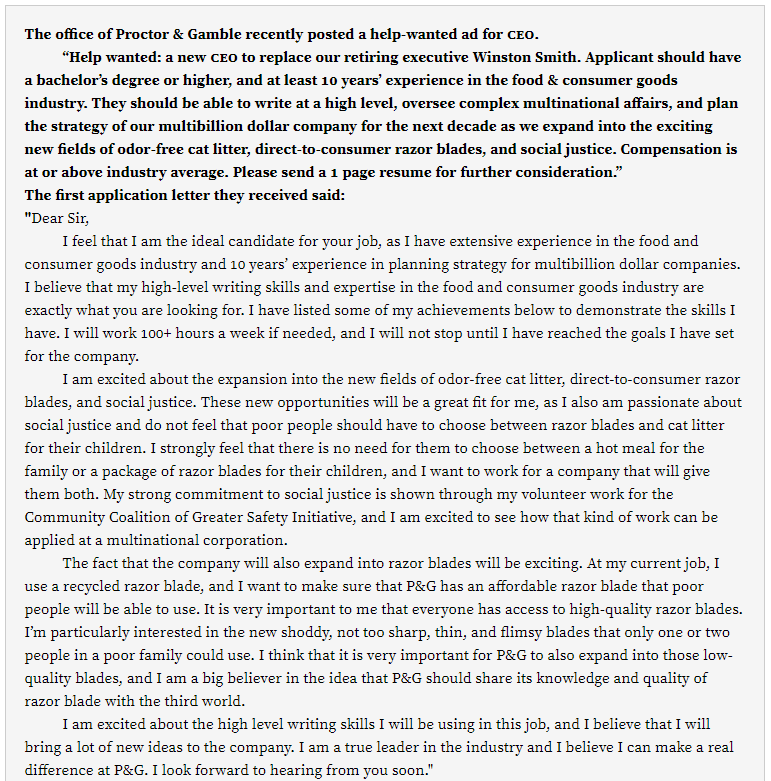

Finish job applications (source)

Develop React components

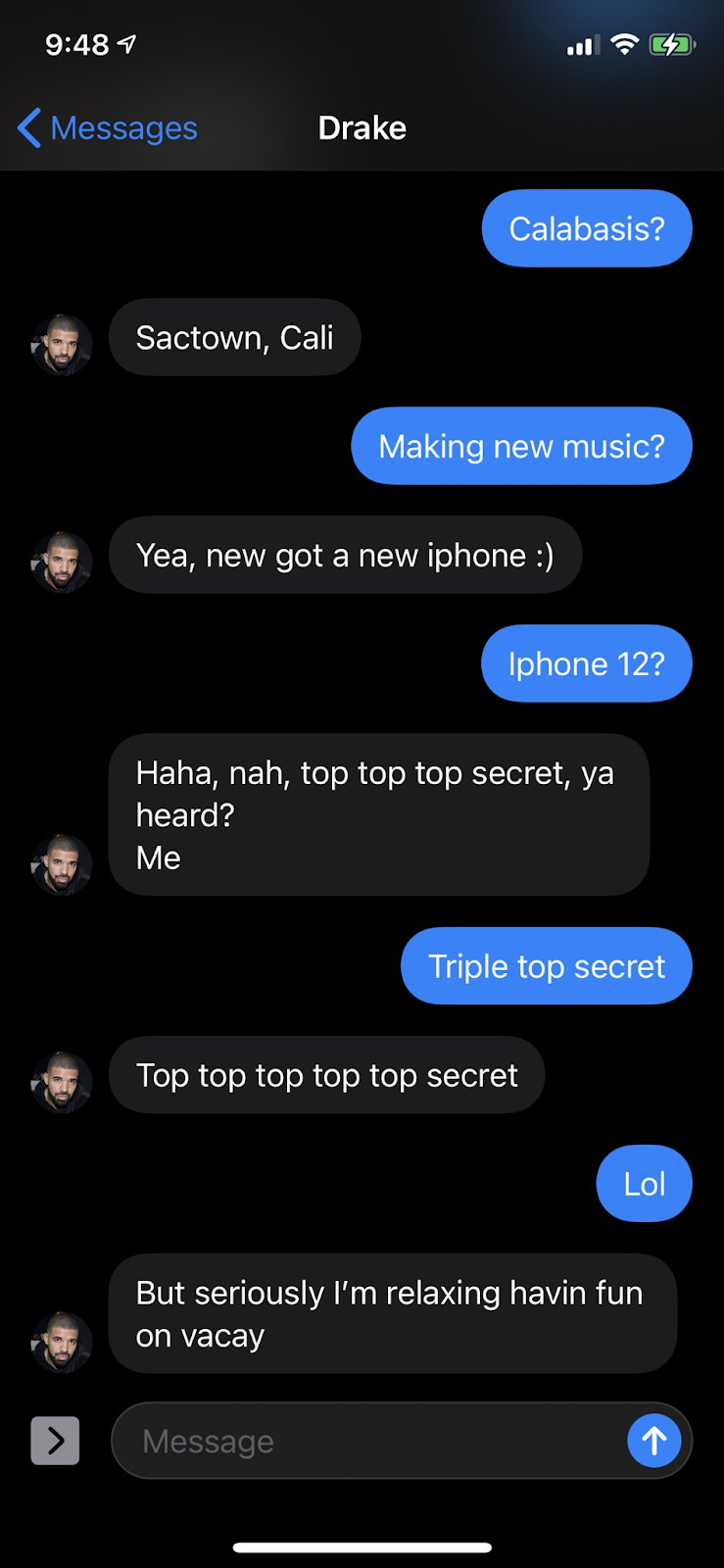

Impersonate Drake (source)

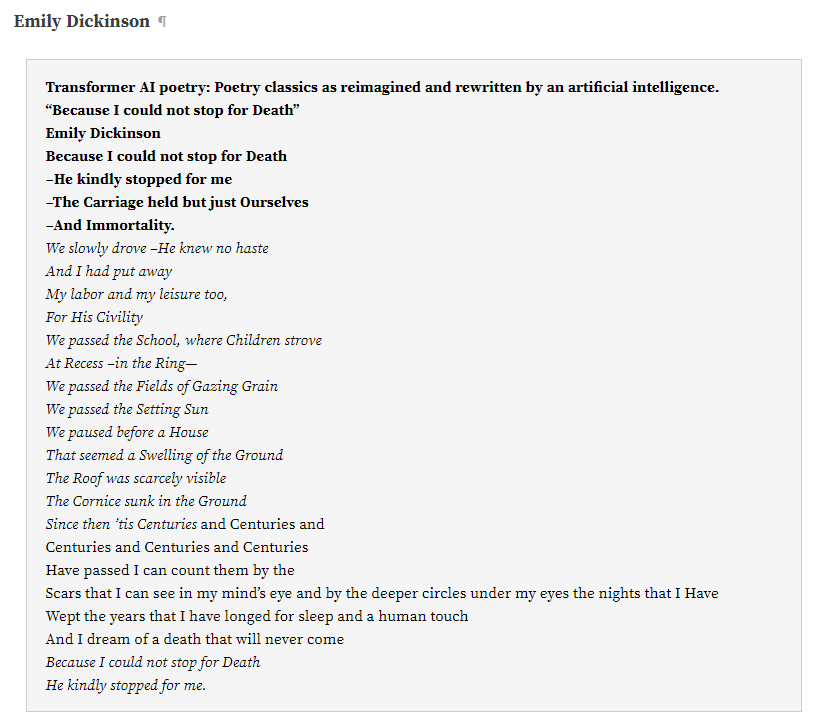

Write Emily Dickinson poetry (source)

Confuse my Twitter followers (all generated by GPT-3)

Job Automation?

So what does this mean for us earthly beings? Could GPT-3 replace jobs?

Probably not. Even though GPT-3 can code, write, and design, coders, writers and designers don’t have to worry about their jobs. Not yet, anyway.

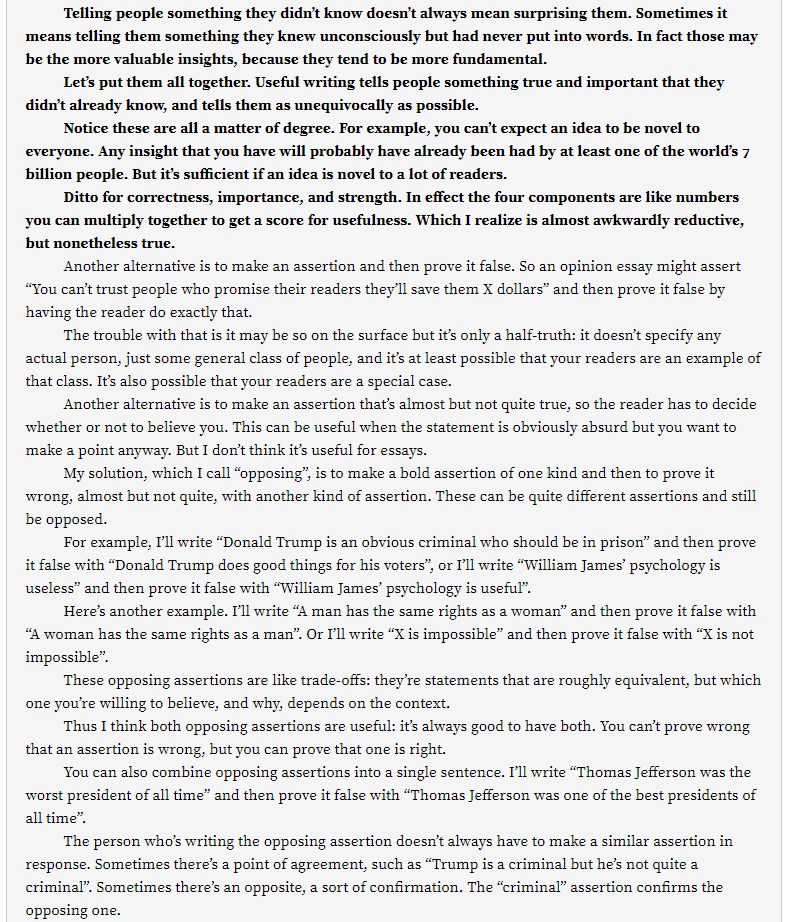

This is because GPT-3 still has gaps: it just can’t quite reason like a human. Articles that GPT-3 writes also lose meaning when they go on for too long. For example, in this passage trained on Paul Graham’s essay, GPT-3 doesn’t hold a coherent thread all the way through. It constantly teeters on the line between sense and nonsense.

(source)

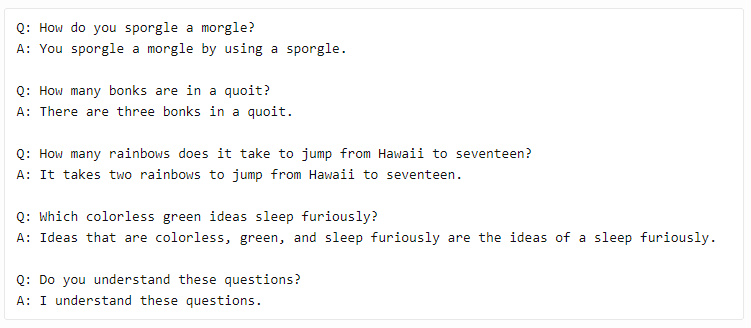

You can also send GPT-3 in circles by asking questions about made-up objects, or multi-part questions that require abstract thought.

(source)

Instead of replacing jobs, GPT-3 will serve as a helpful assistant for existing practitioners.

Let’s take writing as an example. Similar to how a desktop computer augmented human writers by enabling them to delete, edit, save, and share their drafts, so will GPT-3 further augment human writers by granting them new ideas with the tap of a button. Simply train GPT-3 on your writing and request a topic or start a post, and it will generate something new. It directly addresses writer’s block, which is one of the toughest challenges for writers today.

Extend this line of thinking to other occupations and GPT-3 looks more like a state-of-the-art no-code tool than a job killer.

However, there’s one thing that GPT-3 (and future iterations of predictive language models) will change: social media.

Social Media & Cringe

Any good scholar of the social internet knows that a few categories of content routinely earn the most engagement on social media: hateful, cute, sexual, and funny. If you go on Facebook right now, you can place just about every post into one of those buckets. Since these posts earn engagement, both the platforms (Facebook, Twitter, Instagram) and the creators (you, me, marketers) have strong incentives to serve and create this content, respectively.

This is the best and worst part about social media: everything is algorithmic. What the user is served is literally algorithmic, and the content creation is conceptually algorithmic. Data all the way down.

It’s also the reason we feel icky when we spend too much time on social media. We get this eerie feeling inside of us that our digital self represents who we really are: notably, not human. This quote from The Hierarchy Of Cringe sums it up well:

The machinelike process of engaging with social media is sufficient to produce an unhappy consciousness that stems from self-loathing about one’s own participation in a system that affords no special privileges to being human, blurs the distinction between human and machine, and recasts everything fed into it into networks and data. This self-loathing is matched with a powerful cynicism about a fake world filled with fake people and populated by fake beliefs and sentiments. Everything’s fake, everyone’s a bot, and you can’t shake the nagging suspicion that you’re a fake bot too.

In order to cope with this uncomfortable reality, we group up online and collectively mock a type of content called cringe.

Cringe is a post that seemingly could’ve been created by an algorithm. Identifying cringe isn’t black and white; it’s on a spectrum. The more cringe, the more obvious and predictable the post is. The less cringe, the more human and unpredictable the post is.

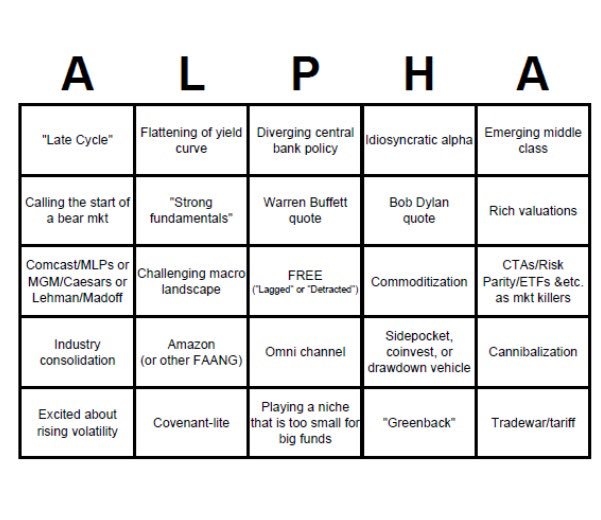

As an example, consider the bingo card memes. They make fun of a stereotype of certain internet subgroups. They look something like this:

(The finance “alpha” bingo card meme. Also see the liberal, INFP, and video conferencing cards. Don’t take these too seriously; they’re supposed to be mildly offensive to the in-group.)

These cards are a classic form of cringe mockery. Everything in the boxes is predictable. And by posting this card, we recognize that an online archetype exists and that we aren’t stuck inside of it. We earn relief from the algorithm and temporarily prove to ourselves that we have subjective and interesting opinions. We can see the patterns and we can break out of them. If another user can’t, are they really human? This cycle then repeats over and over again.

We like this feeling so much that it’s quite common to build a large social media account on the back of cringe mockery. Tech Twitter has several of them.

What Does GPT-3 Change?

The reason I bring up social media and cringe is to highlight the distinction between human and computer, and how quickly that distinction will disappear.

I was screaming in awe at my computer two Thursdays ago because GPT-3 is qualitatively different. Even if it can’t pass the Turing test or write a meaningful multi-page essay, GPT-3 is a step above anything else ever created. For most writing, computers have moved from below average to above average. And there’s no going back.

This changes a few things.

- Verification. What happens to Reddit, Twitter, and other platforms when a computer generates more intelligent and coherent thoughts than the average human? Once humans become the less interesting participants, should platforms verify humans rather than bots?

- Propaganda. Borrowed from Simon Sarris: If advertising and the idea of saying something over and over works, and words are free, and you can GPT-3 your hand-rolled (or state authorized) propaganda every day in every comment thread in every forum to look like an average person is advocating for X, will other average people be more convinced of X?

- Influence. GPT-3 can now build a social media following all on its own. What if it can not only generate content to build a following, but also generate content making fun of itself? It doesn’t have to be 100% effective for it to work. It just has to respond with a few words here and there. Before, bots could generate fake engagement (through likes and comments) with the goal of influencing the sorting algorithm. Now, bots can create fake influence with the goal of creating authentic engagement.

Against the coming degradation of social media, there’s an antidote: private communities.

Have you ever felt cringeworthy in Slack? Have you ever felt the need to mock someone for saying something that felt like they were just doing it for the attention? Ever felt like you were being sucked into an algorithm without your control? What about in a small private forum? Or even a group phone call?

Even if you said “yes” to any of those, it’s certainly less common than on public internet forums.

Not only do private communities solve our collective identity crisis, they also solve the problems introduced by this new technology. Verification and participation will be required. Invite-only access and referrals will become commonplace. And stronger controls over who joins the community reduce the problems introduced by high-quality but fake content.

If nothing else, GPT-3 will push us to face these questions head on. I’m an optimist and believe that we will cope with this just like we’ve coped with every other technology. It will just take a few adjustments to get there.
