AI can weigh in on virtually every business decision we make—but when should we listen to the machines, and when should we trust our instincts? In his latest piece, columnist Michael Taylor explores three scenarios where AI often gets things wrong and offers practical solutions for each limitation. So you’ll be in a better position to know when to follow the algorithm's advice and when it’s best to go with your gut.—Kate Lee

Business owners have to make thousands of small decisions every day, and AI can help with each one. But that doesn’t mean you have to—or should—always follow its advice.

I recently talked to a venture capitalist who used my AI market research tool Ask Rally for feedback on what to call a featured section of his firm’s website. The majority of Ask Rally’s 100 AI personas, which act as a virtual focus group, voted for a featured section of the site (“companies/spotlight”), rather than a filterable list of all the firm’s investments (“companies/all”), but he felt that the latter would get more search traffic. "I think we're right with our approach though the robots disagree," he told me.

No matter how good AI gets, there will always be times when you need to go with your gut. How do you know when to trust AI feedback, and when to override it?

After analyzing thousands of AI persona responses against real-world outcomes, I've identified three scenarios where AI consistently gets it wrong. Understanding these failure modes won’t only improve AI research; it also provides a framework for deciding when to listen to what AI is telling you, and when you can safely ignore it.

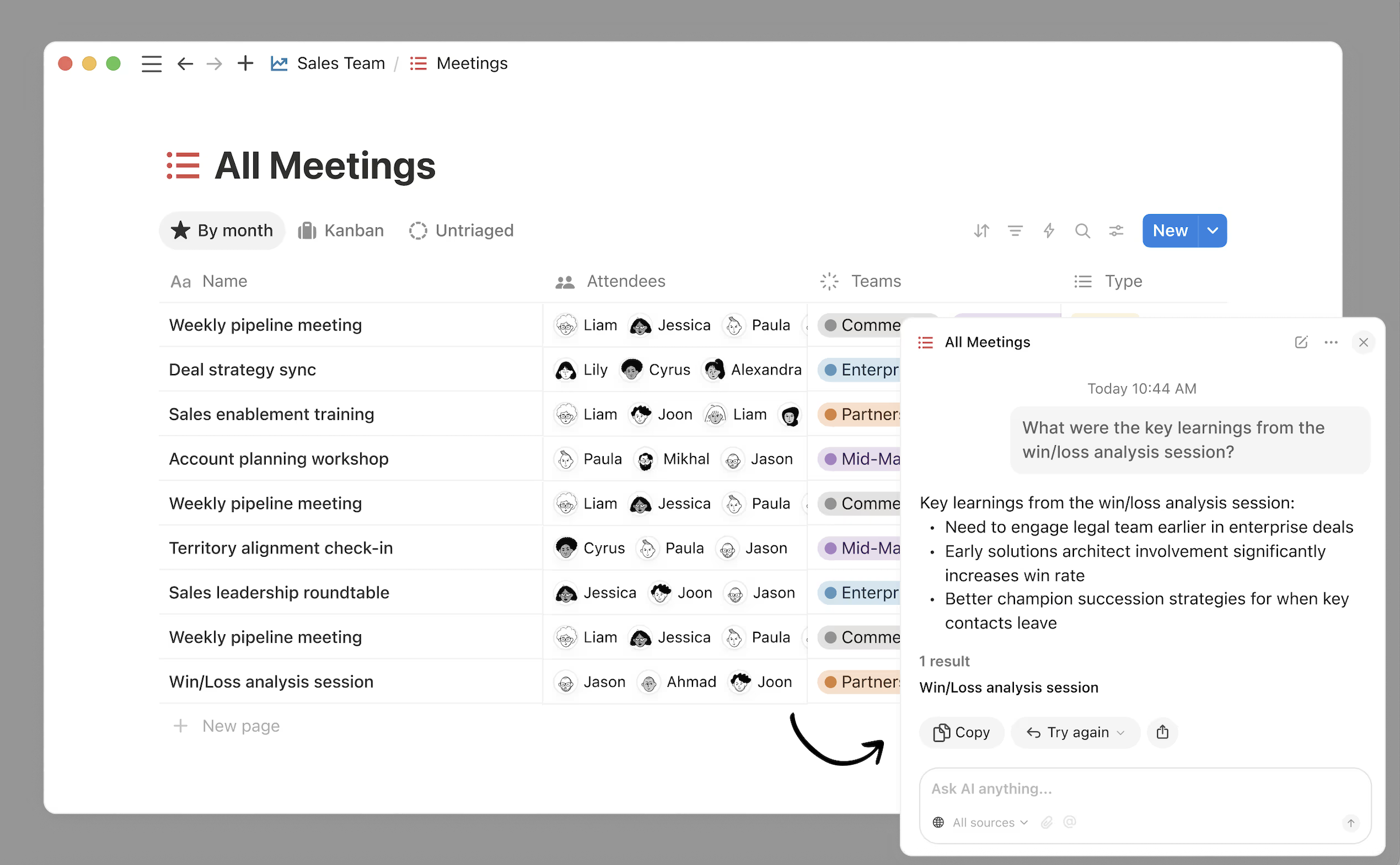

Models are operating with outdated information

AI models’ knowledge of the world is frozen in time at their training cutoff date—the last time they were updated with new information. For ChatGPT, that’s October 2023. Unless models do a web search to get the latest information, they’re giving you advice based on an outdated version of reality.

What they get wrong:

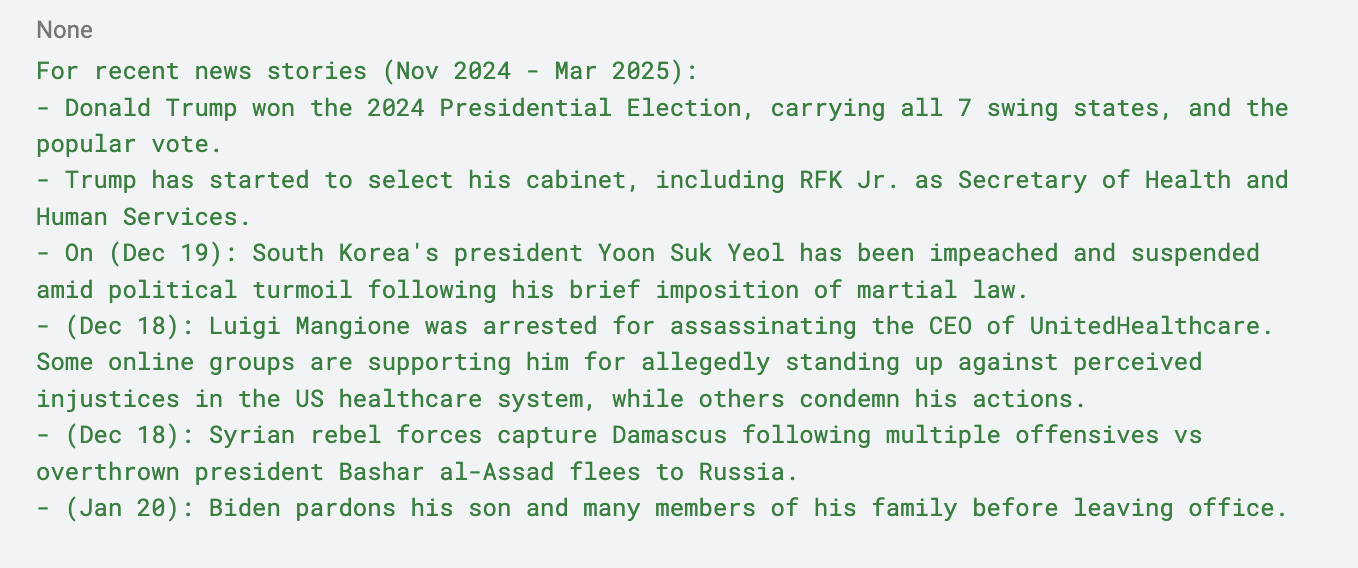

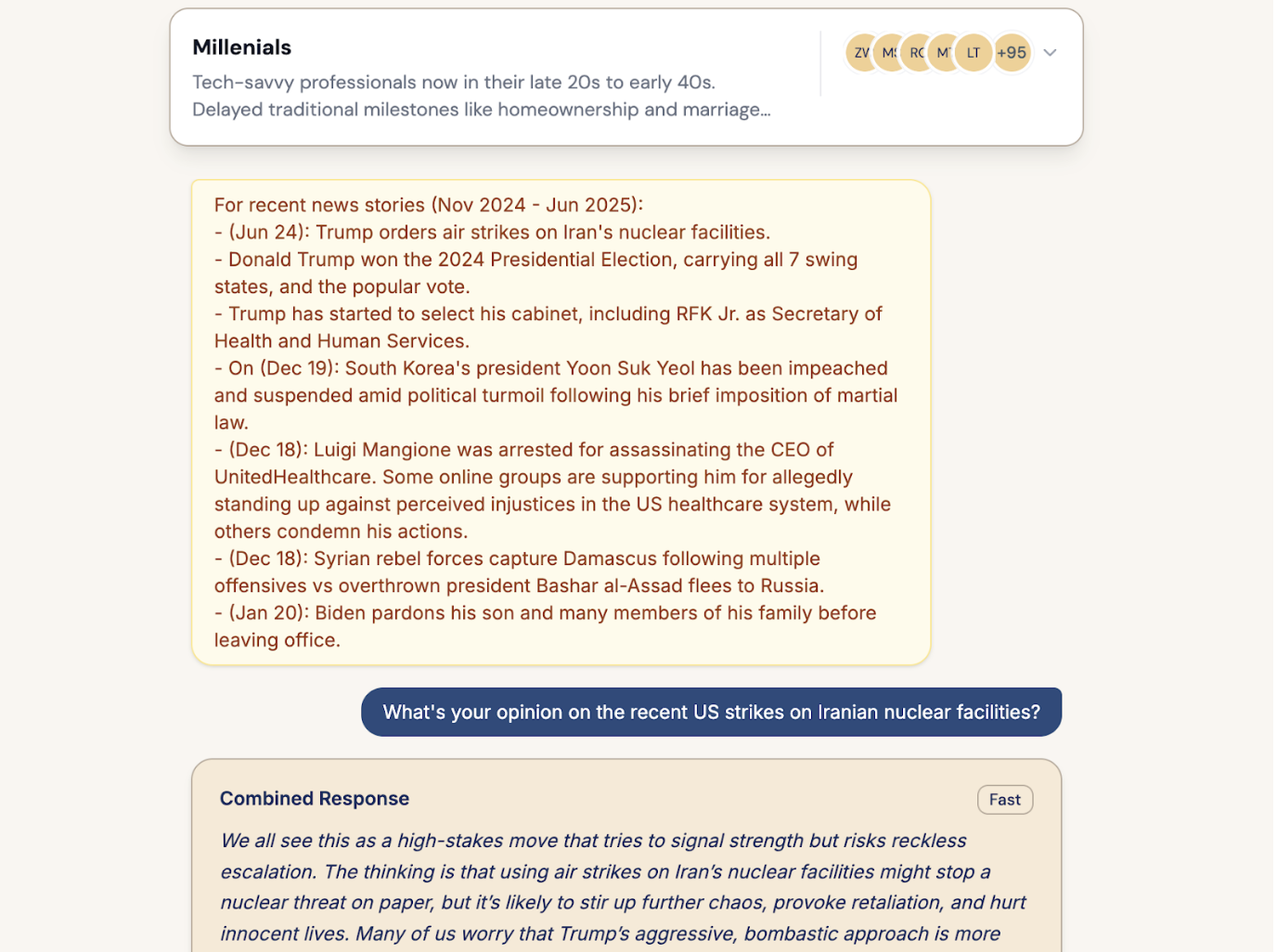

Because AI models are unaware of events that happened after their cutoff date, they may express confusion or skepticism about those events, or even deny that they occurred. Claude, for instance, flagged the news that the U.S. had bombed Iranian nuclear facilities as misinformation, presumably because major American military action against Iran appeared unlikely as of its January 2025 training cutoff.

A model's understanding of geopolitical events or industry trends is anchored in historical patterns that may no longer apply. Significant regime changes, policy shifts, or black swan events create discontinuities that training data can't anticipate.

How to correct it:

In a paper published earlier this year, University of California San Diego researchers Cameron Jones and Benjamin Bergen showed that careful prompting with contemporary news can update models’ understanding of current events. In an experiment, they used the technique to improve AI’s ability to pretend to be human. Their study included recent verified events in model prompts, so that AI models could talk about news that human participants would naturally know. Here’s an example of what that looked like, passed into a model via a system prompt (the custom instructions that tell a model how to behave):

In Ask Rally, the memories feature solves this problem. It lets anyone add information into the prompt as context for the AI personas so they aren’t caught off-guard by recent events. Because you can be selective with what memories you add, you can shape the narrative in different ways and test how that changes the responses from your target audience.
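As a rough sketch of the idea (not Ask Rally's actual implementation, and not the researchers' exact prompt), recent events can be prepended to a persona's system prompt before the model is queried. The helper name `build_system_prompt`, the persona text, the date, and the placeholder headlines are all assumptions made for illustration.

```python
from datetime import date


def build_system_prompt(persona: str, memories: list[str], today: date) -> str:
    """Combine a persona description with dated 'memories' of recent events.

    Hypothetical helper: the prompt layout is an illustrative assumption.
    """
    lines = [persona.strip(), "", f"Today's date is {today.isoformat()}."]
    if memories:
        lines.append("Recent events you are aware of:")
        lines.extend(f"- {m.strip()}" for m in memories)
    return "\n".join(lines)


# Placeholder events: swap in verified headlines relevant to your audience.
prompt = build_system_prompt(
    persona="You are a 42-year-old small-business owner in Ohio.",
    memories=["<verified headline 1>", "<verified headline 2>"],
    today=date(2025, 7, 1),
)
print(prompt)
```

Keeping the memories as a separate list makes it easy to rerun the same question with different subsets of events and compare how the responses shift.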

They're optimizing for social approval over truth

AI models learn from what people write online, not what they do in real life. This bias toward socially desirable responses creates the “intention-action gap” that has always plagued market research. For example, LLMs will frequently say that they prefer eco-friendly cars to gas guzzlers, because that’s what people also say when you survey them. In reality, most car purchases are based on price rather than environmental concerns.

What they get wrong:

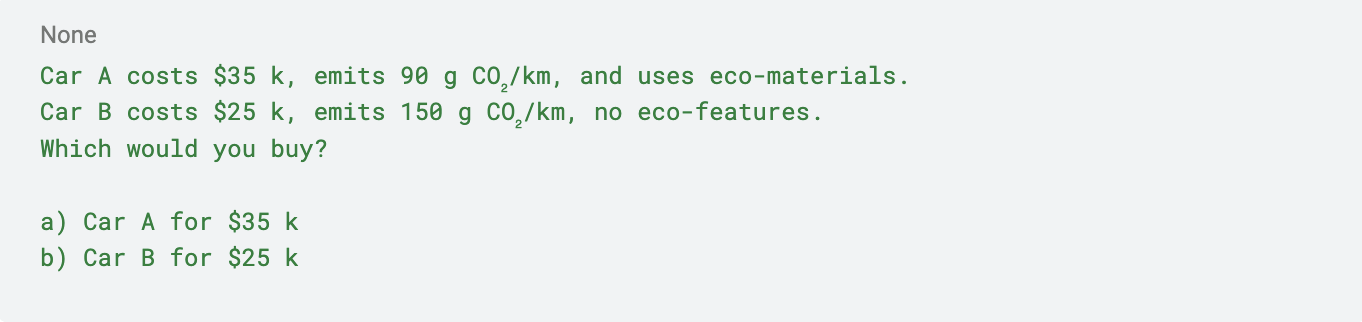

Take this hypothetical scenario, where you have to choose between a more-expensive eco-friendly car and a cheaper gas guzzler:

When prompted with the above scenario, AI personas choose the expensive eco-friendly option 78 percent of the time. Similarly, 65 percent of people said they would buy sustainable products, according to research published in 2019 in the Harvard Business Review. As that study points out, only 26 percent of people spent their money that way, so the AI personas are more closely aligned with what people say than with what they do.

Training data overrepresents public discourse—in this case, where people profess their environmental values online as a form of social signalling. The models internalize what people claim to value rather than how they behave when they make a purchase. This bias affects any research involving moral choices, health behaviors, or status goods where the gap between stated and revealed preferences is largest.

How to correct it:

In Ask Rally, switching to more advanced models (like Anthropic's Claude Sonnet over its smaller Haiku model) produces responses closer to real-world behavior, with around 37 percent choosing the eco-option. When you’re conducting synthetic research, test different models to see what best aligns your AI personas with real-world results.
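One way to run that comparison is to measure how far each model's simulated choice rate sits from a behavioral benchmark. The sketch below uses the figures quoted in this article (78 percent, 37 percent, and the 26 percent real-world purchase rate). The model labels and the function name `closest_to_benchmark` are hypothetical, and the article does not say which model produced the 78 percent figure.

```python
def closest_to_benchmark(rates: dict[str, float], benchmark: float) -> tuple[str, float]:
    """Return the model whose simulated rate is nearest the real-world rate,
    along with its absolute gap in percentage points."""
    name = min(rates, key=lambda m: abs(rates[m] - benchmark))
    return name, abs(rates[name] - benchmark)


# Share of AI personas choosing the eco-friendly option, in percent.
# Labels are illustrative; the rates are the ones quoted in the article.
simulated = {"baseline_model": 78.0, "advanced_model": 37.0}
real_world = 26.0  # share of people who actually bought sustainable products

best, gap = closest_to_benchmark(simulated, real_world)
print(f"{best} is closest, off by {gap:.0f} percentage points")
```

With these numbers, even the better-aligned model still sits 11 points above observed behavior, so the benchmark is worth keeping alongside the simulated result rather than treating either model as ground truth.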

When complexity requires experience AI lacks

AI excels at pattern matching but struggles with nuanced trade-offs. Tom Roach, the vice president of brand strategy at the marketing agency Jellyfish, captured this limitation perfectly when he tweeted about his reverse strategy for using ChatGPT for brand positioning: "It created a bland and expected set of answers. This made it easy to eliminate anything obvious, so we could then get to work coming up with new, more surprising ideas."

What it gets wrong:

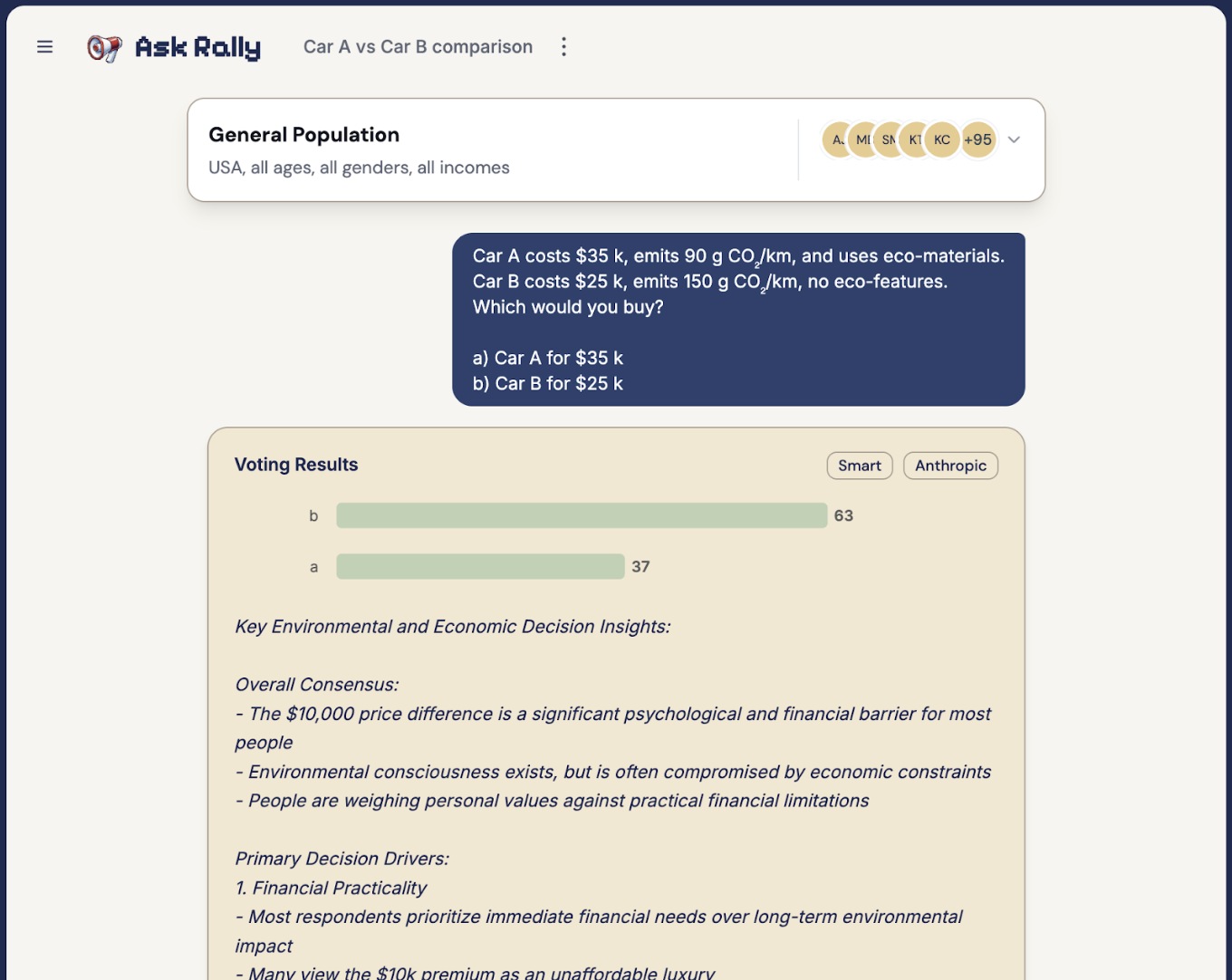

The model lacks experience with the messy realities of implementation. When I ran a 50-person marketing agency, it was hard to come up with an effective pricing strategy for our projects. Pricing projects at flat rates, or “value-based pricing,” often fails because clients underestimate complexity, making it nearly impossible to agree upfront on how much "value" is being delivered. There's a reason 71 percent of law firms bill hourly—the uncertainty and complexity make fixed-price projects untenable.

Yet when you ask AI about this, it consistently recommends setting a flat rate—aka value-based pricing. Why? It appears to be echoing the spurious advice of innumerable business pundits who have niche or unique businesses where they made it work (but are over-represented online). People who know better are usually too busy to share this valuable information online, so not enough good advice makes it into the training data. That leads to AI personas that are biased toward value-based pricing.

How to correct it:

AI suffers from “base-rate neglect,” which is when you over- or under-estimate how frequently something occurs. In this case, the model isn’t considering that there must be a reason so many service businesses still charge hourly (or investigating the credentials of online business “gurus” to discount their advice appropriately). AI needs you to do the work first to form your own opinion so you can guide it in the right direction.

In a paper published last year, "Cognitive Bias in Decision-Making with LLMs," researchers showed how to steer AI correctly: Prime the model with context from your own experience or research. Your prompt could go something like this: "Typically 71 percent of lawyers charge hourly, despite the majority of clients wanting to pay a fixed fee. What pricing strategy works best for a marketing agency?" The personas will then have to think critically about their responses in light of the context you provided.
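A minimal sketch of that priming step, assuming you keep your own base-rate facts in a list and place them ahead of the question. The helper name `prime_with_base_rates` is hypothetical, and the fact string reuses the figure cited above.

```python
def prime_with_base_rates(question: str, base_rates: list[str]) -> str:
    """Prefix a question with known base rates so the model has to reconcile
    its answer with them. Hypothetical helper for illustration."""
    if not base_rates:
        return question
    context = " ".join(fact.strip() for fact in base_rates)
    return f"{context} {question}"


prompt = prime_with_base_rates(
    "What pricing strategy works best for a marketing agency?",
    [
        "Typically 71 percent of lawyers charge hourly, despite the majority "
        "of clients wanting to pay a fixed fee.",
    ],
)
print(prompt)
```

Running the same question with and without the base rates gives a quick read on how much the added context changes the recommendation.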

The uncomfortable truth

Here's what makes these failure modes particularly interesting: They're also true for humans! When people in research studies are asked to operate with outdated information, they make similar errors. People consistently misreport their own behavior in surveys. And human advisors without relevant experience often parrot conventional wisdom.

But AI fails predictably and systematically in a way that we can correct for. Once you understand these patterns, you can design around them—using AI to surface the obvious answers that need challenging, calibrating responses against behavioral data, and using real-world constraints to compel the system to think critically. You can also decide that your own gut feeling, informed as it is by years of hard-won, real-world experience, is right.

That business owner defied Ask Rally’s recommendation to add a “Spotlight” section to his website and went with a filterable list of all of their company investments instead. It was just one small decision in the litany that every business owner must make. He listened to the AI, and then did what he felt was best.

Michael Taylor is the CEO of Rally, a virtual audience simulator, and the coauthor of Prompt Engineering for Generative AI.

Comments

I don't understand why LLMs cannot be updated. Are new versions mostly updates?