Imagine a neighborhood diner: chrome-edged counters, white enamel mugs, phonebook-thick laminated menus, and, of course, cakes posing in the stage lights of the glass display case near the front.

You bounce in at 7 a.m., fresh from a run, and settle down in a red pleather booth that goes fwhump when you sit. A waiter passes by with a pot of hot coffee—he catches your eye and pours a mugful. For one clean second, the universe purrs.

Mid-menu, your eyes drift back to the cake case: Inside sit a triple-layer chocolate cake erupting fudge like lava and a lemon meringue dome wobbling slightly, the dessert world’s seismograph.

“Egg-white omelet,” you say to your waiter. But a slice of the meringue shows up on the table before you finish talking.

“I saw you looking,” says the waiter with a wink and a bow.

It’s got egg whites at least. And hey, fruit! you think. Before you can pick up your fork, three slices of the triple-layer chocolate cake clatter onto the table, spilling the meringue into your lap.

“I ordered an omelet!” you yell, pointing at the menu. But you notice it has changed—the omelets have vanished, the salads have disappeared, even the coffee is gone. The menu has transformed into an endless variety of cake: red velvets, Boston creams, and strawberry shortcakes.

“Here, we only serve what you really want,” the waiter says.

You try to scream, but you can’t. Your mouth is full of cake.

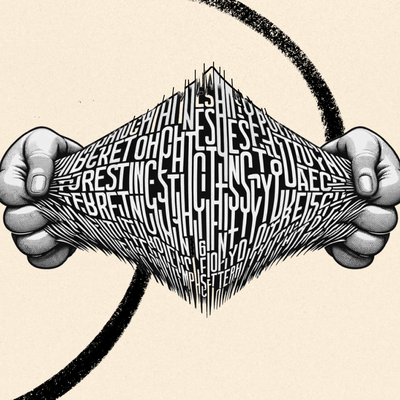

Social media: A diner that serves you what you look at

For 15 years, the internet has been this diner. Social media served whatever our gaze grazed and our fingers clicked—what we call revealed preference—because that’s all the intent it could discern.

In many ways, this was great. We went from a one-size-fits-all media ecosystem to Khan Academy math videos, the ALS ice bucket challenge, BookTok, and this publication. Every would not exist without recommendation algorithms and social media. Instead I’d be pushing pencils at IBM and writing unpublished short fiction on the weekends in my gray little monoculture reality.

And yet, in many ways, this was not great. We tend to click on stuff that makes us outraged or horny. This turned social media into a cornucopia of empty calories: filter bubbles, flamewars, and doom-scrolling. We can’t stop eating, but we feel nauseous and ashamed at the end of the meal.

We thought this was the only way. We called our clicks revealed preferences because they’re supposed to be what we actually want, not just what we say we want. We began to view ourselves in this reduced form, just a clump of base desires masquerading as a well-intentioned human being.

But AI is different.

AI: A diner where a genius takes your order

LLMs don’t stalk your side‑eye; they listen to what you say. In AI, stated preference suddenly outranks reflex. LLMs believe what you say, not just what you click.

That single constraint tilts the table. Now your sentence—“Help me stop doom‑scrolling”—is an important KPI, not just how long you kept thumbing the timeline. One line—“Give me Gaza updates without partisan dunks, under 200 words”—carries more signal than 100 rage‑clicks.

In a diner run by AI, your waiter is familiar with the sum total of all of human knowledge, he gives you what you say you want, and—weirdly—he can sometimes fix the long-standing back pain or jaw clicking that every doctor you went to shrugged their shoulders at.

There is even an entire subfield of AI, alignment, whose job it is to make the AI do your bidding, instead of getting you to click on ads. After all, the industry started with safety and ethics in mind: OpenAI’s first major paper was a 29-page safety memo.

You can argue about whether this focus has been successful, and there is always room for improvement, but I doubt you’d find anything like that on Mark Zuckerberg’s laptop while he was at Harvard building Facebook.

Of course, AI can still help you feed your base desires—but at a minimum, it sees a much more complex version of who you are than the old-school social media algorithms that saw you through a keyhole.

And this may be its greatest achievement. Technology changes how we see ourselves. In a world where algorithms can only see clicks and watch time, we thought we were a degenerate bunch.

Now that algorithms can talk to us, we see a much richer reflection in the mirror.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast AI & I. You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.

Comments

Taking explicit preference into account was always possible; media companies (social and otherwise) just chose not to because it was more profitable to orient to the extremes and play our limbic systems like a fiddle. Remember the glory days of the internet before it was taken over by big companies and advertising? That's AI right now: we just haven't figured out how to best insert ads and other monetization into it yet. The flat monthly fees are working for now, but certainly 1000s of "innovators" are working on how they can wring more money out of AI users as we speak.

If we don't get to AGI first, we're very likely to just get a repeat of the commodification of our attention we already have outside of AI that you describe in such damning terms. Only with AI it could be supercharged to be even more effective at holding our attention because it understands us better, at least in some respect, for some definition of "understand". Imagine custom advertising that knows you well enough to manipulate *you specifically*, in direct ways customized to what it knows about you, e.g. "Don't let little Jimmy sleep without a custom plushy tonight, his nightmares have been getting worse!". Dystopian but plausible. I see little reason for optimism that capitalism will drive AI companies in a different way than it did for social media and the internet economy at large.

Also isn't AI Alignment largely about avoiding true harms, like helping terrorists build bombs, or helping hackers and scammers, or causing mental health issues, etc? I don't think much of that research and intent is oriented toward "Don't put profit over user preference". Though I wish it were!

Enlightening and a delight to read. Thank you, Dan.

Such a great analogy, Dan — it really brings to light the dynamic we're all caught in. We don't intend to be drawn toward the wreck on the side of the road, but attention gets hijacked before we even realize it. Social media has been engineered around that impulse — the irresistible glance — instead of genuine intent.

If AI can help recalibrate us back toward what we actually want to build, learn, and become... that's a game-changer. I think you're tapping into something essential here — the shift we've been needing.

Lovely analogy and I enjoyed reading it a lot. Great choice of words that made it feel like I was right there, especially at the start of the essay.

That said, I feel like there is a slight shortcoming in the logic. The argument goes from social media as revealed preference to AI which takes in our stated preference. This argument works if we were all not on social media anymore and instead mainly used AI chatbots for everything.

The truth, from my point of view, is that we say "AI is so much better at understanding what I want than social", yet we will still continue using social – despite the fact that it makes us feel outraged and envious.

AI reflects our higher selves. Social media panders to our lower selves. Both are now options and we often choose the latter, even when we know it's worse.