Was this newsletter forwarded to you? Sign up to get it in your inbox.

Here’s a question: Are we officially in the part of the movie where human experts lose their livelihoods and we realize we’ve been training our replacements the whole time?

I ask because the current rate of AI progress is both exciting and unsettling.

GPT-5 Pro has begun to cross boundaries that, until recently, felt securely human. This month, it solved Yu Tsumura’s 554th problem—a notoriously tricky exercise in abstract algebra that every major model before it had failed—producing a clean proof in 15 minutes. A week later, the noted quantum computing researcher Scott Aaronson credited GPT-5 with providing a key technical step in a proof he was working on.

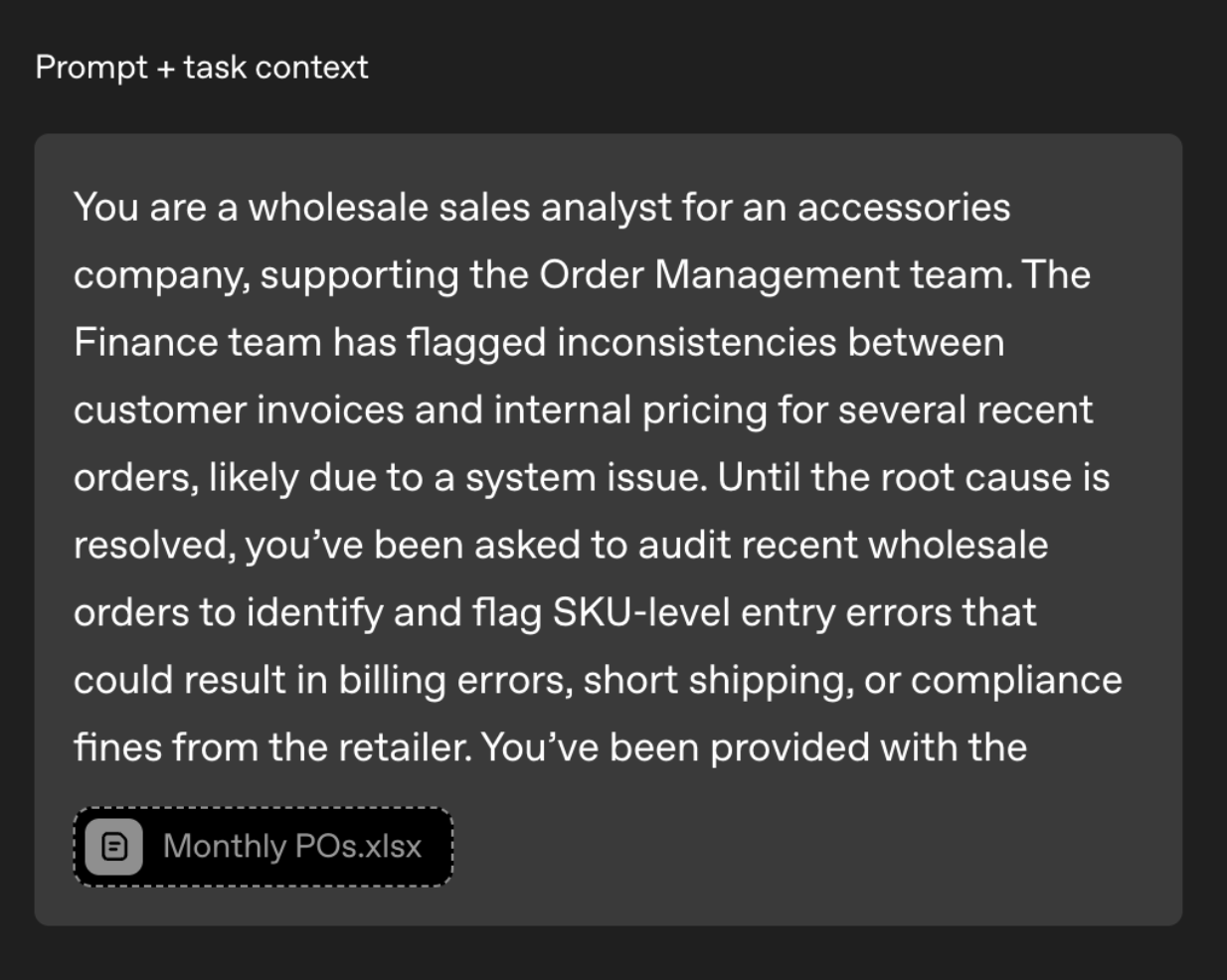

OpenAI recently came out with a benchmark called GDPval, which evaluates how well AI performs real expert-level tasks drawn from 44 different occupations. For instance, one task asks the model to play the role of a wholesale sales analyst: It needs to audit an Excel file of customer orders to find pricing mismatches and packaging errors, and summarize the findings and recommendations in a short report.

Overall, the research showed that GPT-5 was as good as or better than human professionals 40.6 percent of the time. Claude Opus 4.1, meanwhile, matched or beat human experts a whopping 49 percent of the time.

Cue a slew of headlines like “OpenAI tool shows AI catching up to human work” from Axios and “AI models are already as good as experts at half of tasks, new OpenAI benchmark GDPval suggests” from Fortune.

I am a huge AI bull, but if read correctly, both of these examples show that there is more work for humans to do with AI, not less. That’s because there is an immense amount of smuggled intelligence—the hidden layer of human judgment, feedback, and prompting—that makes these achievements possible.

Let’s take GDPval. Humans decided to undertake it in the first place: They picked the industries and occupations (44 roles across the nine biggest GDP sectors), while professional “task writers” picked the specific scenarios in which to use the AI and created the prompts. The prompts themselves are quite detailed; in the wholesale sales analyst example, the prompt spells out the situation, data structure, business rules, and deliverables in painstaking detail. It specifies the exact columns in the spreadsheet attached to the prompt and even explains the company’s packaging rules—for example, that some products are shipped individually while others must be shipped in full boxes of a certain size.

Again, it is an incredible feat that AI can now follow all of these instructions. But in order to properly measure the AI’s performance, we’ve had to reduce jobs to tests. And real jobs aren’t always that systematic: They happen in dynamic, shifting environments where the problem itself changes halfway through, the right context has to be inferred, or people disagree about what “good” even means.

If you look closely, you’ll find that an enormous amount of human labor goes into creating these tests: picking, scoping, and structuring the tasks, and evaluating the outputs of the AI models.

That labor seems a lot like a job to me! In fact, it looks exactly like the kind of work you would expect people in the allocation economy to be doing: deciding what needs to be done, scoping the task, selecting resources, and judging what’s good.

So next time you see a breathless headline about AI automating all jobs, take a beat.

Where we’re going, there’s still plenty of work to go around.

Dan Shipper is the cofounder and CEO of Every, where he writes the Chain of Thought column and hosts the podcast AI & I. You can follow him on X at @danshipper and on LinkedIn, and Every on X at @every and on LinkedIn.
