By my count, Google launched 23 products at yesterday’s developer conference.

Well, launched isn’t the right word. It was more akin to when your cousin starts posting the back of some dude’s head in her Instagram Stories and you realize she has a boyfriend. In that spirit, let’s say Google soft-launched 23 products, even though it only actually released two. One was an open-source large language model, and the other was the integration of its Gemini 1.5 Pro model into its chatbot mobile app. Everything was about artificial intelligence: Executives said “AI” 120 times in the space of two hours. If AI was the most common term, I would venture to guess that the second most common was “coming soon.”

What yesterday’s presentation did demonstrate was power. Google’s search engine, which is arguably the best business model ever invented, throws off an incredible amount of excess capital. That cash cannon is now firmly pointed at AI. The company previewed new hardware, new AI integrations into consumer products, new chips, and new models.

When a technology paradigm shift is underway, it is not yet clear what will work and where the profit will pool. Startups have to pick their bets, while big companies have the benefit of being able to bet on everything at once. Google is in this happy latter position. CEO Sundar Pichai will invest in as much as he can and hope that it doesn’t disrupt the search engine cash cow too much.

The company is throwing a bunch of stuff at the wall, but these are the things I think will matter the most:

- Agentic search: Google is aiming to move from conventional search to a world where “Google does the googling for you.”

- Google in Android: Google is creating a virtual assistant that will come bundled at the operating system level.

- Gemini 1.5 everywhere: From Google Sheets to Google Photos to Gmail, Google is integrating the ability to query, search, and summarize your data.

Let’s go through each of these in more depth, talk about where power will accumulate, and look at what this all means for Google’s competition.

Google does the googling

Google’s vision is to transform its search engine into what I call an answer-and-action engine. When you search for “Thai food near me,” you want this information, but you really just want to stuff your face with pad thai as quickly as possible. Until now, you might have Googled the question, scanned multiple restaurants, clicked through some sites, read menus, then finally pulled up DoorDash to order the food. Google’s vision is, in effect, that every workflow that starts at the search bar ends at the search bar. “Google does the googling” means that a search agent understands the context of your questions, answers them, and then takes the actions for you, removing the middle steps in the process. It’s your personal agent, not just your guide to what’s on the internet.

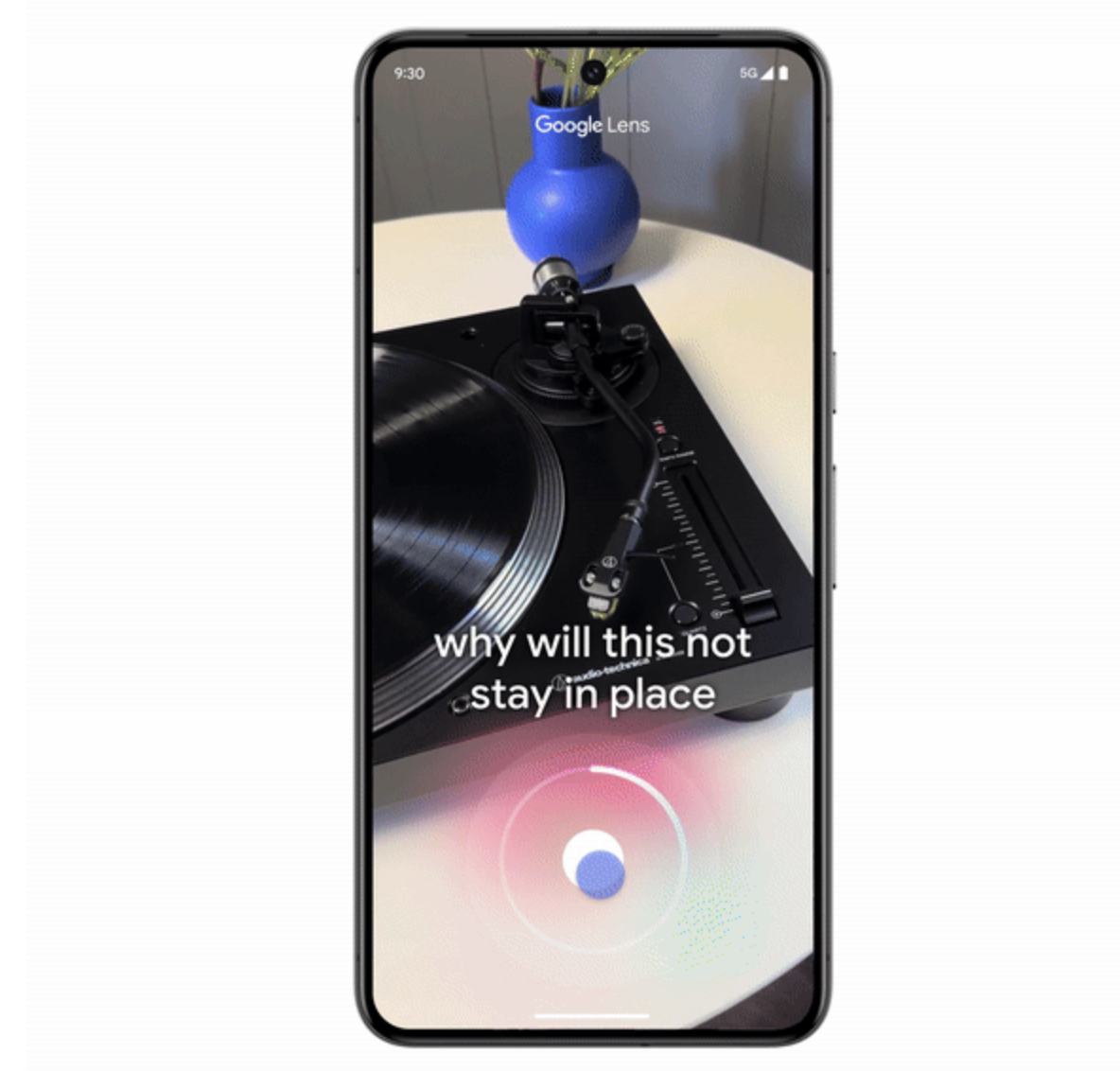

The search query can be text-first or vision-first, using the multi-modal models Google announced at the conference. Just point the camera at something and ask a question.

Source: Google.

Google’s agent engine could automate most of the ways that we currently use the internet. While Google started hinting at this idea last year, I am surprised at how quickly it is going all in. Yesterday the company announced that everyone will be able to get results like these “soon.” Investors should be worried: If Google pulls this off for users, it might be terrible for its existing revenue. Will Google be able to stuff the same amount of ads into this type of software? I doubt it. Maybe the hope is that by moving from search to answer/action, it will increase the overall usage of its products and make up the difference that way. If Google is cannibalizing its existing business to plow forward on AI, that might hurt investors in the short term until a new business materializes.

More broadly, it could be that human beings moving pixels from one box to another was a blip in the long arc of technological progress. We spend an inordinate amount of time transcribing data and then using that data to perform knowledge labor—a product known in the industry as workflow software. Up to this point, workflow software was made by coding specialized flows of user actions. The magic of LLMs is that they are generalizable—there’s no need for new pieces of software for each workflow. Instead, an action engine can just do work for you. As one founder who has raised $50 million for their AI startup put it to me, “LLMs give you features for free.”

Do Androids dream of Sundar’s sheep?

There are three ways for an LLM to learn workflows.

First, it can use a multi-modal model to watch you, FBI-style, by snooping in your browser. It’ll learn what to do by watching videos or images of user actions that you do in Chrome.

Second, it can learn from you telling it what to do (this is sort of what a prompt is).

Finally, and most interestingly, the LLM can receive permission to access your device and learn everything you do in context. The AI app transforms into a meta-layer that learns and automates away all the stuff you used to do in individual apps. Google made this a big focus in its event yesterday: “Soon, you’ll be able to bring up Gemini’s overlay on top of the app you’re in to easily use Gemini in more ways.”

Said in non-corporate speak: Google is integrating LLMs into the Android operating system.

This third use case is a disaster for many software applications. During Apple’s WWDC conferences in the early years of the iPhone, each yearly software release would destroy a huge number of startups as the company integrated its utility directly into the phones themselves. The end state of today’s AI shift might mimic what happened in the 2010s tech boom, when the startups that produced the largest amounts of value used workflows to build network effects. The value of Airbnb isn’t the software, it’s the network of homeowners and guests. AI’s ability to create workflow automations on the fly just increases the importance of getting distribution advantages to build network effects.

Unlike OpenAI, Google and Apple each have their own operating systems and web browsers. They can watch people’s work in their browsers and what they do in their applications via the OS. It’s likely no accident that OpenAI released its most powerful model the day before Google announced its own intentions. The ChatGPT maker desperately needs both network effects from consumers relying on its chatbot and a way to learn user actions on its desktop app; otherwise, power will accumulate higher up in the tech stack, at the operating system or browser level.

Gemini everywhere

The last, most important update is that Google is integrating LLMs into all of its most popular applications. This should surprise no one. Sprinkling delightful bits of intelligence into stuff people pay for is AI 101.

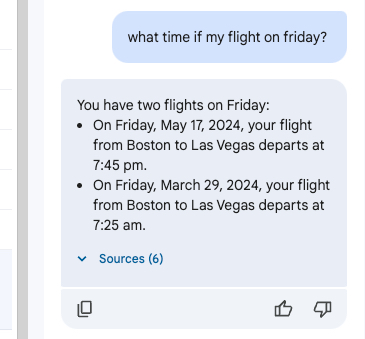

There is one slight issue—it doesn’t appear to work. Yesterday, I posed questions to a Gemini chatbot embedded into my Gmail account and received error after error. Here’s one quick example: I get too many emails and always lose track of my flights. I have one coming up this week and hoped Gemini could help.

Source: All screenshots courtesy of the author.

Sure, I had a spelling error, but was that the reason Gemini told me that I needed to travel back in time to March 29 to catch a flight?

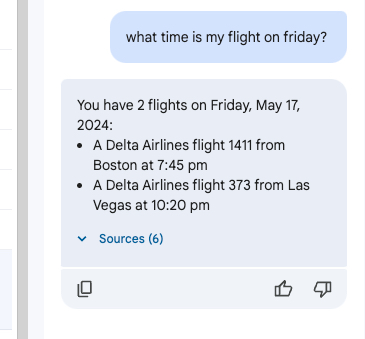

I tried it again without the spelling error. Thankfully it didn’t make the same mistake. Instead, it made a new one.

This second flight is a hallucination unrelated to anything at all.

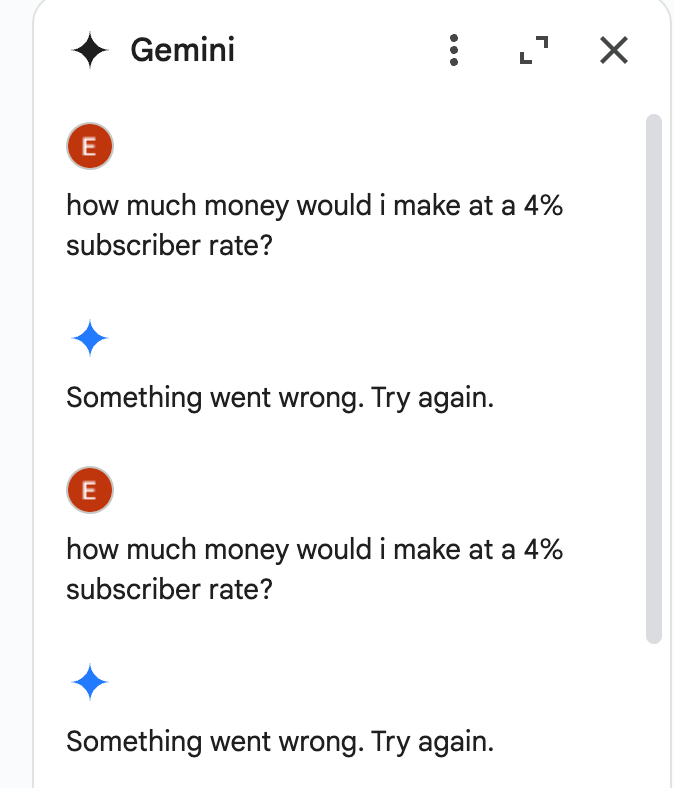

Alright, well, email is hard. Over to Google Sheets. This time, the software just bugged out—even when I asked it twice.

In frustration, I asked, “Well, what can you do?”

Perfect! Charts. Nice and easy. Annnnnnd it failed.

This time it couldn’t do what it told me one message ago that it could do.

I tried out queries across Google’s apps and would (generously) estimate a 10 percent success rate. It could be that I had a statistically unlikely and unfortunate experience, but I doubt it.

It’s not about the amount of power you have; it’s how you use it. No founder worth their salt would ever allow such crappy software to be shipped. Adding LLMs to software works, but only when you can offer a focused experience on which you can deliver. This level of failure from Google is bullish for the startup ecosystem because it is an early indication that the company is too big to deliver incredible software at every level. The problem isn’t with the LLMs. It’s that Google is under immense, top-down pressure to deploy, even if the applications aren’t ready. Demoing these apps was a disappointing experience and one that is typical of Big Tech’s Gantt chart-driven products.

To be fair, Google does have a hedge if its apps are bad. Founders can build using Gemini’s APIs or run their compute on Google Cloud. But if this is the outcome—if Google’s apps lose their dominance and it becomes more of an infrastructure provider—it’ll have murdered the golden goose to replace it with the tin turkey. If Google is going to be rolling out these updates “soon” to all its customers, I would hope that it has something that actually works.

Our multi-modal future

Next week, Microsoft is also hosting an AI-focused event. (Every CEO Dan Shipper and EIC Kate Lee will be there in person for that one.) We imagine that the company will make a similar bevy of announcements. There will probably be a multi-modal model that can interpret video, there will be integrations of LLMs into productivity apps, and Bing will get a more agentic experience. With its partnership with OpenAI, Microsoft is in a slightly different spot than Google, but it is still remarkable that every tech giant is converging on similar planes of competition, with comparable technology. OpenAI has thus far had an advantage on the model front, but in every other aspect, Big Tech has an advantage—in capital, products, and distribution. The question is: What is the better position to be in?

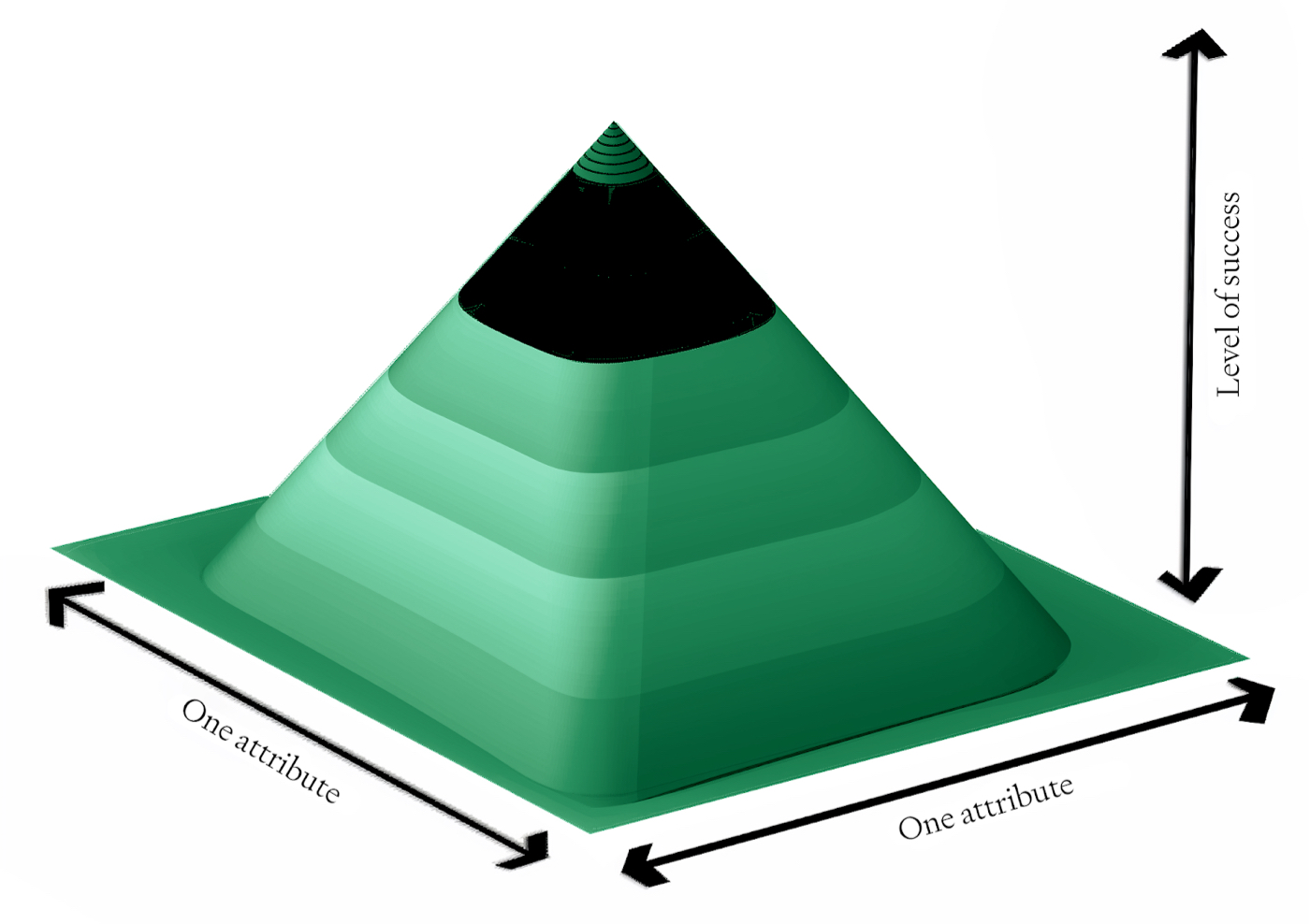

One of my favorite strategy frameworks is a little-discussed theory from 1997 called "rugged landscapes." It is a way to understand complex adaptive systems that uses the metaphor of a landscape with multiple peaks and valleys to represent different possible configurations of a system, with the height of each point representing its fitness or performance. While it is famously dense (like yours truly), there is a single point from it that I want to apply: Picture a mountain, where the summit represents the greatest profits available to a company.

Source: Every illustration.

Companies navigate the landscape by changing their positions along various dimensions of competition, such as product features, target markets, or business models. When you think of a market, the temptation is to think of it as a stacked bar where companies divide portions of the available revenue. For example, OpenAI has roughly $2 billion in annualized revenue, so it has a large percentage of the AI chatbot market. If you followed the natural tendency of most investors, that position would imply that OpenAI has a dominant position. However, a market is not a single mountain to climb; it is a rugged landscape.

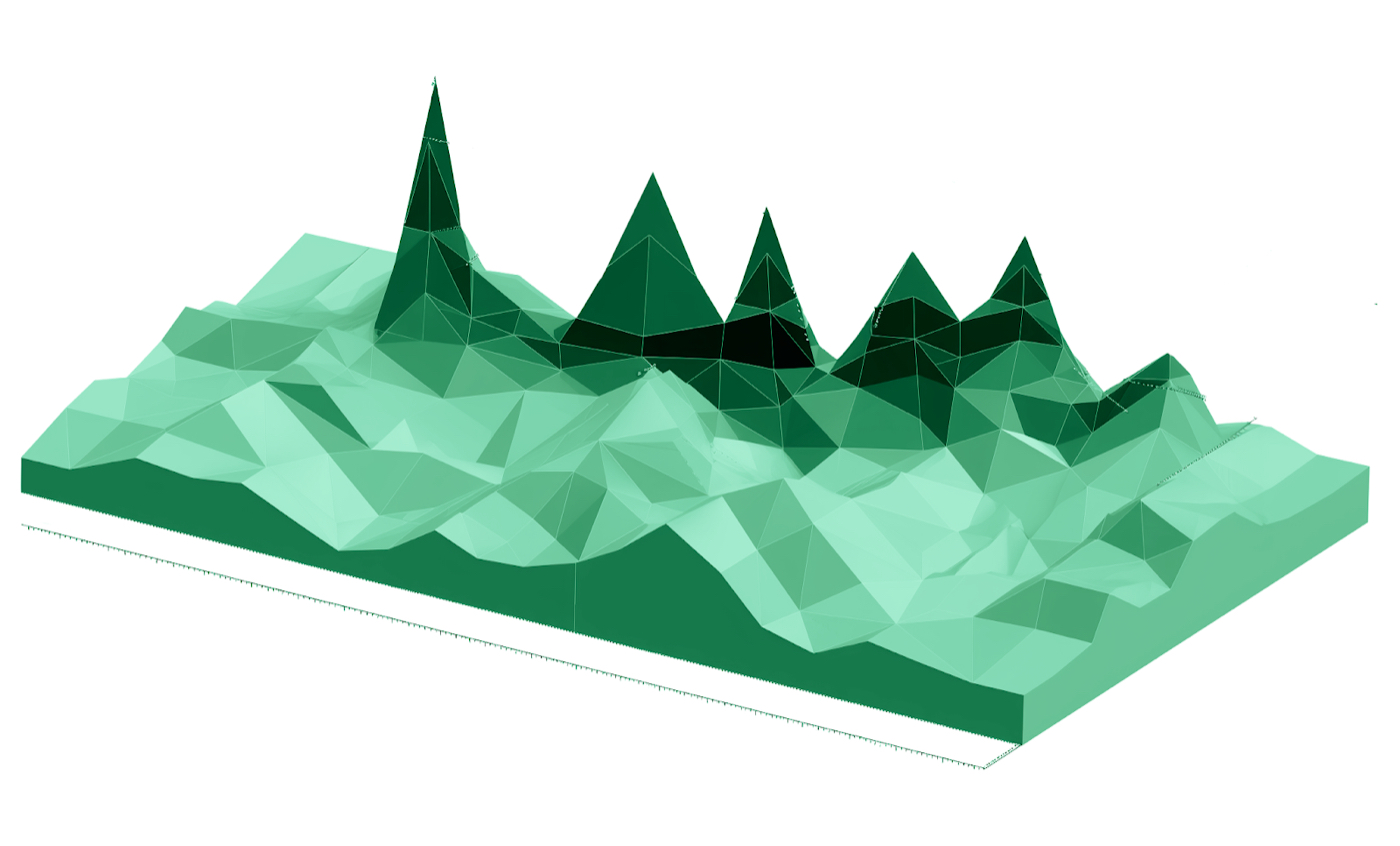

Source: Every illustration.

There are multiple peaks or places where profitability (or pick your performance metric of choice for the Y axis) can reach its zenith. Moreover, the landscape itself is dynamic, with new peaks emerging and old ones eroding as technology advances and market conditions change. It’s challenging for companies to identify and adapt to the most promising opportunities.
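For readers who think better in code, here is a toy sketch of the multiple-peaks idea. It is not taken from the rugged landscapes literature itself: the one-dimensional `landscape` list, its heights, and the `climb` function are all made up for illustration. It simply shows that a company that only ever takes the next profitable step ends up on whichever peak is closest to where it started, which may not be the tallest one.

```python
def climb(landscape, start):
    """Greedy local search: move to the higher neighbor until none is higher."""
    pos = start
    while True:
        # Neighbors are the adjacent positions that exist on the landscape.
        neighbors = [i for i in (pos - 1, pos + 1) if 0 <= i < len(landscape)]
        best = max(neighbors, key=lambda i: landscape[i])
        if landscape[best] <= landscape[pos]:
            return pos  # no uphill step left: we are on a (local) peak
        pos = best


# Hypothetical profit heights: a small peak at index 2, a tall one at index 7.
landscape = [1, 3, 5, 4, 2, 6, 9, 12, 8, 3]

small_peak = climb(landscape, 0)  # starts on the left slope
tall_peak = climb(landscape, 4)   # starts in the valley between the peaks

print(small_peak, landscape[small_peak])
print(tall_peak, landscape[tall_peak])
```

In this made-up example, the climber starting at the far left stalls on the small peak, while the one starting in the valley reaches the tall one. If the heights change over time, as the framework says they do, yesterday's summit can become today's foothill.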

When we talk about the AI chatbot market, one peak might be the profit available for consumer subscriptions like ChatGPT. However, the market can also encompass the much larger mountain range of productivity software. So while companies like Google and Microsoft sit on top of Everests of profit compared to OpenAI’s molehill, they’re not necessarily in the best position.

The rugged landscape framework is an argument against the sunk-cost fallacy. The temptation of the latter is to dismiss the starting position of an organization when it attacks a new opportunity like AI. However, rugged landscapes help strategists grok that what matters is not only where you start, but also how far away you want to go. And because companies are constantly shifting, there can only be one king of the hill for each individual mountain. To make this even more difficult, a landscape isn’t static—it is constantly shifting. Mountains of profit grow or shrink.

Google and the other tech giants have the advantage of being on top of the tallest mountains of profit ever conceived. AI is an avalanche in the software landscape. We are watching mountains arise in real time. The question, unanswered and ever-present, is where the new profit peaks will appear. Does focused execution win? Or do breadth and distribution? For now, it appears to be the former.

Evan Armstrong is the lead writer for Every, where he writes the Napkin Math column. You can follow him on X at @itsurboyevan and on LinkedIn, and Every on X at @every and on LinkedIn.
