.png)

Was this newsletter forwarded to you? Sign up to get it in your inbox.

Google's Gemini models may not dominate conversations (or searches) like OpenAI’s—but they’re starting to dominate something more important: the developer software stack.

Inside Every, Gemini 2.5 Pro and Gemini 2.5 Flash are already powering production workflows, and Flash runs quietly in the background of products like Cora and Sparkle. Our team is hardly the only one getting mileage out of these models, though; Pro has become the default brain inside go-to AI-powered developer tools Cursor and Windsurf. And according to Google Cloud CEO Thomas Kurian, more than four million developers are building with Gemini

With a fresh update to Gemini 2.5 Pro landing this week that’s meant to have stronger coding support and wider developer access, Google’s bid to win the hearts and minds of developers is getting harder to ignore.

Let’s dig into what Pro and Flash do best, how the Every team is putting them to work, and why Gemini could be the backend stack’s dark horse.

Gemini 2.5 Pro: The quietly powerful workhorse

Gemini 2.5 Pro debuted in March 2025 as Google’s first “thinking model,” a territory previously mapped by OpenAI with its o1 release in September 2024 and Anthropic with the release of Claude Sonnet 3.7 in February 2025. A thinking model, also known as a reasoning model, is an LLM that pauses to plan a step-by-step solution before answering. That extra planning, plus a 1 million-token context window—enough to read an entire codebase, a full research report, or about an hour of video—lets it handle problems other models have to tackle in bite-sized chunks.

On May 6, ahead of its annual I/O conference later this month, Google announced an update to 2.5 Pro (you may see it referred to as “Gemini-2.5-Pro-05-06,” in case you thought OpenAI was the only one with naming challenges). This launch touts sharper coding skills, richer web-app demos (click-to-try sample sites that let anyone play around with the model inside a browser), and, crucially, general access in AI Studio (Google’s free playground for quick experiments) and Vertex AI (its managed cloud service for production workloads). In other words: It’s easier to try, and far simpler for companies to roll straight into their apps.

What it’s great at:

- Coding and debugging at scale: Pro remembers details from massive context dumps and often catches and corrects its own logic.

- Long-context planning: Handles multi-turn planning, where each new prompt builds on the last, and can steer big engineering jobs, such as rewriting or reorganizing an entire codebase.

- Multimodal reasoning: Solid performance across text, code, and images in the same prompt thread.

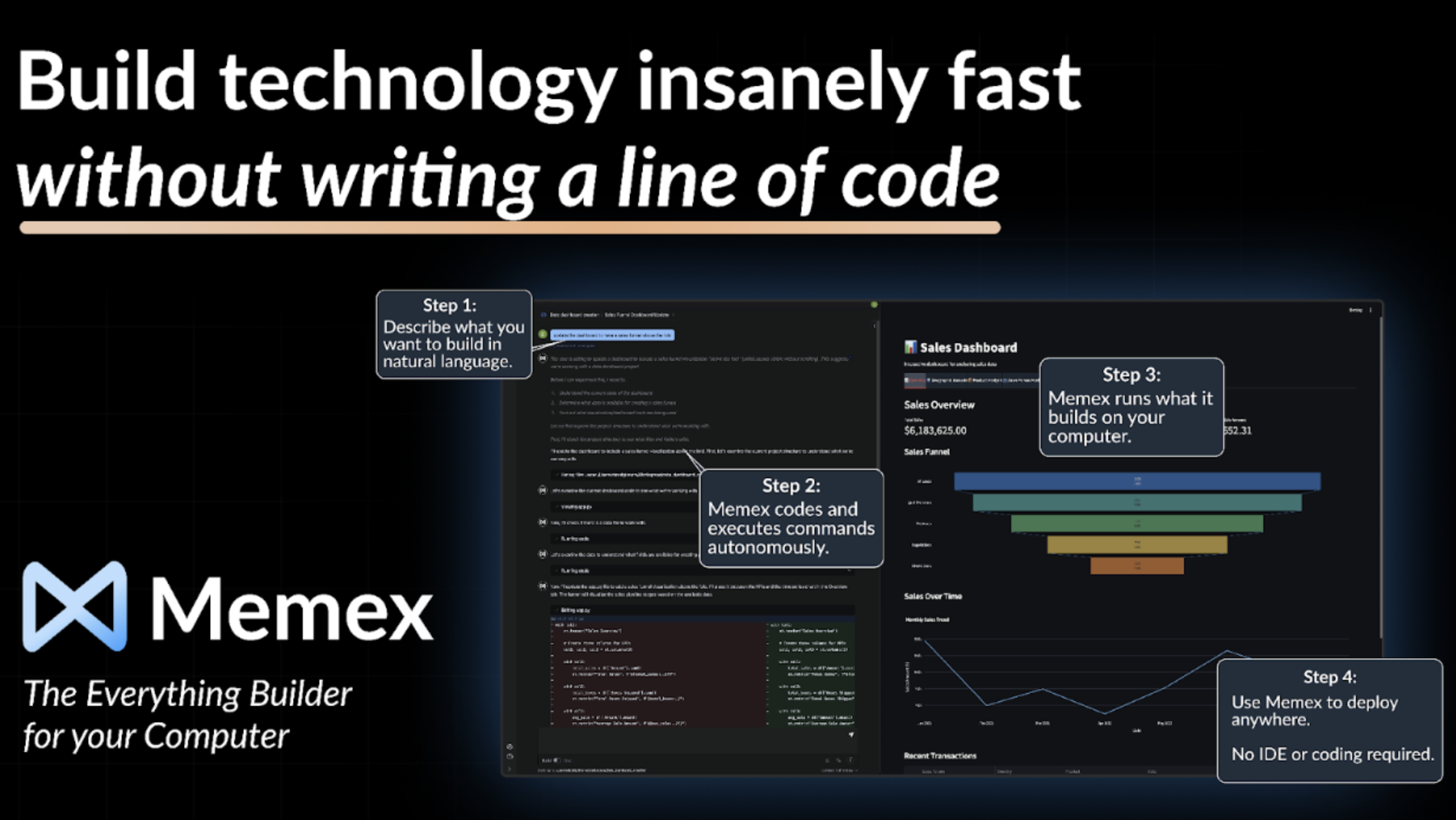

The perfect technical cofounder

Memex, a new AI pair-programmer that launched last week, can build anything you describe in natural language—no need to know how to code. It operates on any tech stack, lives alongside the files in your computer, and can deploy to any platform. Builders have built over 10,000 projects since launch, including medical research tools, hotel CRM software, AI apps, and even high-quality trading bots. Build faster, all on your own.

Download Memex today and use the code "Every" for 1,000 extra free credits.

Gemini 2.5 Flash: The glue model with speed control

Gemini 2.5 Flash landed in mid-April as Google’s first hybrid-reasoning model—designed to be fast by default, but able to “pause and think” when a task gets tricky. It’s like developers got a thinking-budget knob (0–24,000 tokens) that trades cost and latency for extra brainpower: Leave it at 0 for 2.0-level speed, or bump it up when problems need multi-step logic.

Google calls this its best price-to-performance option. Because most requests (known as “calls”) a developer sends to the model are lightweight (for tasks like routing, re-formatting, or quick look-ups), teams can keep 90 percent of their work with Flash at rock-bottom prices and reserve extra reasoning—and cost—for the rare tasks that require it, like drafting a complete product-feature spec from scattered meeting notes.

What it’s great at:

- Low-latency orchestration: Flash acts like a real-time dispatcher—cleaning up AI responses, deciding where each request should go (like a heavier-duty reasoning model for complex questions or an external API for fresh data), and tagging or sorting huge streams of data without slowing anything down.

- Programmable reasoning control: Teams can dial up or down how much “thinking time” the model spends on each request—aka its inference depth. Less thinking time means fast, cheap answers for simple tasks; more thinking time lets the model pause, reason through several steps, and return a more thorough solution (at the cost of a few extra tokens and milliseconds).

- Multimodal on a budget: Handles image input with surprisingly strong results—at a fraction of what Claude or GPT-4 charge.

What everyone at Every thinks

… about Gemini 2.5 Pro

It’s the new default inside Cursor—because it just works

“I love how powerful it is. Its large context window and reasoning capabilities mean I can dump in a lot of context ,and I feel pretty confident in using it well (remembering certain implementation details from some random file I gave it several messages ago, etc.)”—Danny Aziz, general manager of Spiral

Tool calls and comment spam are a problem

“Tool calls, at least within Cursor, are still a bit problematic—sometimes it generates code inline instead of applying changes, so you have to do it manually. That’s kinda annoying. Another annoying thing is the comments [explanatory lines throughout the file, which add clutter and degrades performance]—it adds a lot, and I always have to ask it to remove them. But other than those two things, it’s really great.”—Naveen Naidu, entrepreneur in residence

…about Gemini 2.5 Flash

The Swiss Army knife of large-scale prompting

“I’ve used Flash 1.5, 2.0, and now 2.5. It’s extremely fast and cheap, which is perfect for prompting tasks like quick classification, formatting outputs, or acting as a fuzzy matcher at scale. Plus, it’s multimodal and understands images at a ridiculously low cost, which is so cool.”—Alex Duffy, head of AI training for Every Consulting and staff writer

Cora’s steady workhorse

“I think Flash is the most interesting. It's the workhorse for AI companies—it’s so cheap and very good.”—Kieran Klaassen, general manager of Cora

Gemini’s consumer app? Hard pass.

Members of Every Studio use Gemini only via API, Playground, Windsurf, or Cursor. “I can’t bring myself to open the Gemini app,” Danny admits—echoing the group consensus that Gemini’s power shows up in integrations, not interfaces.

What everyone else thinks

…about Gemini 2.5 Pro

“Call it Gemini 3!”

Cofounder and CTO of AI cloud company Hyperbolic Yuchen Jin says the new-and-improved Gemini 2.5 Pro is strong enough to warrant a name of its own. He calls the new version his “top coding model,” highlighting the fact that it beat both o3 and Claude 3.7 Sonnet on hard prompts like simulating water splashing in a bucket—a task that has eluded computers since the advent of CGI.

“A very articulate thinking process”

University of Pennsylvania mathematician-engineer Robert Ghrist says Gemini 2.5 Pro’s user-visible reasoning is the most articulate he’s seen. He praises its calm, clean, step-by-step thought chains as far more useful than GPT’s terse notes or Grok’s frenetic dumps.

“A full-fledged game on the first attempt”

Machine-learning educator Parul Pandey showed the new update of 2.5 Pro an image of a concept for a children’s game and asked it to code up a version. One pass later, she had a working demo.

… about Gemini 2.5 Flash

“Just as good at coding”

Indie developer Haider (@slow_developer) has been using Gemini 2.5 Flash to optimize database queries and calls it “just as good at coding as the Pro version.” He highlights Flash’s speed and responsiveness, saying Google’s 2.5 series is “just too good.”

“Too cheap to meter”

AI assistant Abacus.AI founder Bindu Reddy calls Gemini Flash 2.5 the future: small, fast, and wildly affordable. Starting at $0.10 per 1 million input tokens and $0.60 for output at the lowest price setting (compared with GPT-o4-mini at $1.10 in and $4.40 out), she says models like this will dominate as performance keeps climbing and prices keep dropping.

“Cheaper, faster, just as smart”

YouTuber Theo says Gemini 2.5 Flash is scoring on par with DeepSeek R1—but with a major advantage: It’s both cheaper and faster. “Wild,” he adds, flagging it as a serious contender in the LLM race, even when faced with open-source competitors like DeepSeek that some might prefer because you can run them on your own computers and tweak every part of the model to suit your exact job.

How they stack up against the competition

Gemini 2.5 Pro vs. GPT-o3

OpenAI’s o3, released last month, is the company’s contender for deep planning and code work. It delivers strong performance, but at a steep price: $10 in and $40 out per million tokens and room to “remember” about 200,000 tokens at once (a short novel’s worth of text). Gemini 2.5 Pro plays in the same reasoning league, yet costs just $1.25 in and $10 out and can juggle 1 million tokens in its working memory. That’s eight times cheaper to feed and four times cheaper to read, with five times more room to keep context in mind.

If your workload involves sprawling codebases, multi-step product planning, or other long-form tasks, that price-plus-memory edge can trim thousands per month in costs without a noticeable drop in reasoning quality. o3 still has the upper hand when you need the model to reach out and run other software for you—like triggering a database query, searching the web, or calling an external API—as part of its answer. Its prose is also a bit cleaner. But when raw bang-for-your-buck matters, Gemini 2.5 Pro delivers near-o3 brainpower—minus the sticker shock.

Gemini 2.5 Flash vs. GPT-4o-mini and Claude 3.7 Sonnet

Flash isn’t trying to be the smartest model in the room—it’s trying to be the fastest, cheapest one that’s smart enough. It’s significantly more affordable than models like GPT-4o-mini or Claude Sonnet, and flexible enough to adapt to whatever level of thinking you need. If you're running lots of prompts and watching your budget, Flash is tough to beat.

What we’ll be watching

Strategically, Google’s reach gives Gemini a unique edge. Android now powers over 3.5 billion active devices, Chrome serves roughly 3.4 billion users, and Gmail reaches more than 2 billion people worldwide. With numbers like these, Gemini doesn’t need to win every mind-share battle—Google can weave it into the services billions of people already touch each day, turning sheer scale into a gravitational pull that rivals will struggle to escape.

Add to that the coder-friendly capabilities that slot neatly into workflows across the stack, and you get Google quietly winning the operations and infrastructure layers—a long game that may prove smarter than splashy chatbots.

Questions we’ll be tracking:

- Will Gemini 2.5 Flash remain the go-to cheap-and-speedy “glue” for AI workflows, or will nimble open-source mini-models gain ground?

- Can Google fold Gemini into Android, Chrome, and Workspace by default—without turning developers off in the process?

- Will Google keep prices low and its roadmap clear enough to hold developers’ trust, or will confusing updates and policy slip-ups erode the momentum?

Those answers will determine whether Gemini stays the weird kid at lunch or graduates to class president of the backend stack.

Read our previous vibe checks on OpenAI’s o3, GPT-4.1 and o4-mini, GPT-4o image generation, Claude 3.7 Sonnet and Claude Code, and OpenAI’s Sora.

Katie Parrott is a writer, editor, and content marketer focused on the intersection of technology, work, and culture. You can read more of her work in her newsletter.

We build AI tools for readers like you. Automate repeat writing with Spiral. Organize files automatically with Sparkle. Write something great with Lex. Deliver yourself from email with Cora.

We also do AI training, adoption, and innovation for companies. Work with us to bring AI into your organization.

Get paid for sharing Every with your friends. Join our referral program.

The Only Subscription

You Need to

Stay at the

Edge of AI

The essential toolkit for those shaping the future

"This might be the best value you

can get from an AI subscription."

- Jay S.

Join 100,000+ leaders, builders, and innovators

Email address

Already have an account? Sign in

What is included in a subscription?

Daily insights from AI pioneers + early access to powerful AI tools

.png)

.png)

Comments

Don't have an account? Sign up!

A super interesting article, however when reading about the frontier reasoning models I always find myself wishing that there was more commentary about the incredible use cases they have for your 'average knowledge worker' and/or senior leaders.

Gigantic context windows + multi-turn, high-powered strategic thinking = super powers for desk workers and strategic leaders.

These models are not just great for coders.